A person dear to you has been diagnosed with amyotrophic lateral sclerosis (ALS) or another devastating disease that puts their ability to speak at risk. If only you could bank their voice,

you think. It’s now possible. The Personal Voice accessibility tool that arrives this month with Apple’s free iOS 17 software upgrade for iPhones allows people to create an

authentic-sounding, albeit slightly robotic replica of their voice — before they lose the ability to speak. The tool, which healthy people can take advantage of as well, works in tandem with

another new iOS 17 feature called Live Speech. The feature lets people type phrases they want spoken aloud, which can be voiced with the synthetic Personal Voice. Apple built the feature

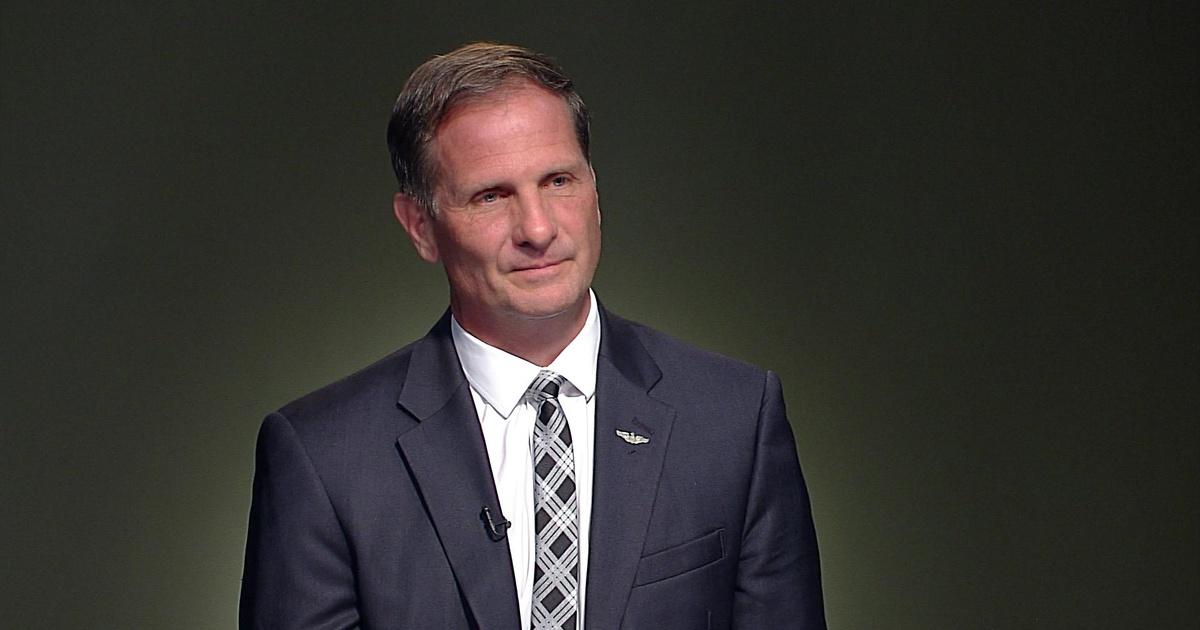

with privacy and security protections. For now, Google doesn’t have an equivalent feature for Android. “At the end of the day, the most important thing is being able to communicate with

friends and family,” ALS advocate Philip Green of the nonprofit Team Gleason organization said in a statement released by Apple. “If you can tell them you love them in a voice that sounds like you, it makes all the difference in the world.”

Apple won’t reveal its plans, but similar technology could be leveraged someday to preserve grandparents’ or other relatives’ voices, left behind for a younger generation after they’re gone.

THE IDEA OF VOICE CLONING IS UNSETTLING TO SOME

For all of its promise as a technology, voice cloning is understandably worrisome,
especially as leaps in generative artificial intelligence produce convincing fake voices. Readily available software tools make it relatively easy to mimic another person’s voice in minutes, often by capturing audio recordings from podcasts, social media and the web.

The creepy part: Scammers are already exploiting such tools. You may have read about incidents where malicious impostors hide behind a loved one’s cloned voice to dupe victims into surrendering sensitive personal information or cash.

The criminals can use as little as three or four seconds of a clip to replicate your voice, says James E. Lee, chief operating officer of the San Diego-based nonprofit Identity Theft Resource Center.

“Identity criminals don’t need it to be perfect,” he says. “They just need something that’s close enough because … the most common thing we see is some sort of relationship scam.”

Lee points to another potentially significant risk: As organizations turn to voice to authenticate an account holder, someone with a good enough clone of a person’s voice could access their account.

Politicians have reasons to be concerned as well. Cloned voices can be used to spread misinformation and deceive voters, similar to the way that deepfake pictures and videos can mislead the public. And AI will make fakes even harder to detect.

Faux voices are being employed in other ways that, at the very least, spur debate about ethics and the proper use of the technology. It conjures up memories of the 1970s’ “Is it live or is it Memorex?” commercials.