
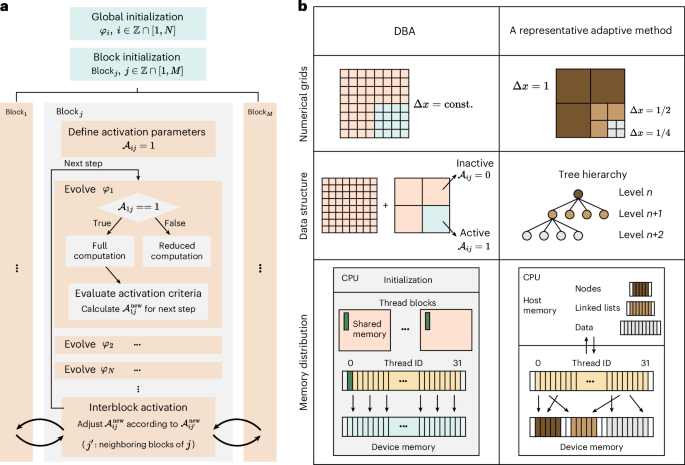

ABSTRACT

Efficient utilization of massively parallel computing resources is crucial for advancing scientific understanding through complex simulations. However, existing adaptive methods often face challenges in implementation complexity and scalability on modern parallel hardware. Here we present dynamic block activation (DBA), an acceleration framework that can be applied to a broad range of continuum simulations by strategically allocating resources on the basis of the dynamic features of the physical model. By exploiting the hierarchical structure of parallel hardware and dynamically activating and deactivating computation blocks, DBA optimizes performance while maintaining accuracy. We demonstrate DBA’s effectiveness through solving representative models spanning multiple scientific fields, including materials science, biophysics and fluid dynamics, achieving 216–816 central processing unit core-equivalent speedups on a single graphics processing unit (GPU), up to fivefold acceleration compared with highly optimized GPU code and nearly perfect scalability up to 32 GPUs. By addressing common challenges, such as divergent memory access, and reducing programming burden, DBA offers a promising approach to fully leverage massively parallel systems across multiple scientific computing domains.
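The idea of activating and deactivating computation blocks can be illustrated with a toy example. The sketch below is not the authors' GPU implementation; it is a minimal CPU-only illustration in plain Python, assuming a 1D explicit diffusion update, fixed-size blocks and a simple activation rule (a block is updated only if the values in the block plus a one-cell halo are not all equal within `tol`). All function names, the activation criterion and the parameter values are hypothetical choices made for illustration.

```python
def diffuse_cell(u, i, r):
    """Explicit diffusion update for cell i with no-flux (clamped) boundaries."""
    n = len(u)
    left = u[max(i - 1, 0)]
    right = u[min(i + 1, n - 1)]
    return u[i] + r * (left - 2.0 * u[i] + right)


def is_active(u, start, stop, tol):
    """Assumed activation rule: the block plus a one-cell halo is non-uniform."""
    lo = max(start - 1, 0)
    hi = min(stop + 1, len(u))
    window = u[lo:hi]
    return max(window) - min(window) > tol


def step_full(u, r):
    """Reference update that touches every cell."""
    return [diffuse_cell(u, i, r) for i in range(len(u))]


def step_blocks(u, r, block_size, tol):
    """Update only the active blocks; inactive blocks keep their old values."""
    new_u = list(u)
    active = 0
    for start in range(0, len(u), block_size):
        stop = min(start + block_size, len(u))
        if is_active(u, start, stop, tol):
            active += 1
            for i in range(start, stop):
                new_u[i] = diffuse_cell(u, i, r)
    return new_u, active


def run_demo(n=256, block_size=16, steps=50, r=0.25, tol=0.0):
    """Diffuse a step profile with and without block activation."""
    u0 = [0.0] * (n // 2) + [1.0] * (n - n // 2)
    full, blocked = list(u0), list(u0)
    active_updates = 0
    for _ in range(steps):
        full = step_full(full, r)
        blocked, active = step_blocks(blocked, r, block_size, tol)
        active_updates += active
    total_updates = steps * ((n + block_size - 1) // block_size)
    return full, blocked, active_updates, total_updates


if __name__ == "__main__":
    full, blocked, active_updates, total_updates = run_demo()
    print("results identical:", full == blocked)
    print("block updates performed:", active_updates, "of", total_updates)
```

With `tol=0.0` a skipped block is exactly uniform, so its diffusion update would be zero and skipping it does not change the result; a positive `tol` would trade a small error for fewer block updates. On a GPU the analogous decision would be made per thread block rather than in a Python loop, which this sketch does not attempt to model.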

DATA AVAILABILITY

All of the experiments in this Resource are based on simulations, and there are no input data. Source data are provided with this paper.

CODE AVAILABILITY

The code that supports the results within this Resource is available via GitHub at https://github.com/zhangruoyao68/DBA and via Zenodo at https://doi.org/10.5281/zenodo.14868458 (ref. 66).

REFERENCES

1. Kirk, D. B. & Hwu, W.-M. W. _Programming Massively Parallel Processors_ 3rd edn (Morgan Kaufmann, 2016).
2. Wang, Q., Ihme, M., Chen, Y.-F. & Anderson, J. A TensorFlow simulation framework for scientific computing of fluid flows on tensor processing units. _Comput. Phys. Commun._ 274, 108292 (2022).
3. Castro, M. D., Vilariño, D. L., Torres, Y. & Llanos, D. R. The role of field-programmable gate arrays in the acceleration of modern high-performance computing workloads. _Computer_ 57, 66–76 (2024).
4. Steinkraus, D., Buck, I. & Simard, P. Y. Using GPUs for machine learning algorithms. In _Eighth International Conference on Document Analysis and Recognition (ICDAR’05)_ 2, 1115–1120 (IEEE, 2005).
5. Fung, J. Computer vision on the GPU. In _GPU Gems 2: Programming Techniques for High-Performance Graphics and General Purpose Computation_ 1st edn (eds Pharr, M. et al.) Chap. 40 (Addison-Wesley, 2005).
6. Götz, A. W., Wölfle, T. & Walker, R. C. Quantum chemistry on graphics processing units. In _Annual Reports in Computational Chemistry_ Vol. 6 (ed Wheeler, R. A.) Chap. 2 (Elsevier, 2010).
7. Anderson, J. A., Glaser, J. & Glotzer, S. C. HOOMD-blue: A Python package for high-performance molecular dynamics and hard particle Monte Carlo simulations. _Comput. Mater. Sci._ 173, 109363 (2020).
8. Phillips, J. C. et al. Scalable molecular dynamics on CPU and GPU architectures with NAMD. _J. Chem. Phys._ 153, 044130 (2020).
9. Abramson, J. et al. Accurate structure prediction of biomolecular interactions with AlphaFold 3. _Nature_ 630, 493–500 (2024).
10. Niemeyer, K. E. & Sung, C.-J. Recent progress and challenges in exploiting graphics processors in computational fluid dynamics. _J. Supercomput._ 67, 528–564 (2014).
11. Michalakes, J. & Vachharajani, M. GPU acceleration of numerical weather prediction. In _Proc. IEEE International Symposium on Parallel and Distributed Processing_ 1–7 (IEEE, 2008).
12. Eklund, A., Dufort, P., Forsberg, D. & LaConte, S. M. Medical image processing on the GPU—past, present and future. _Med. Image Anal._ 17, 1073–1094 (2013).
13. Berger, M. J. & Oliger, J. Adaptive mesh refinement for hyperbolic partial differential equations. _J. Comput. Phys._ 53, 484–512 (1984).
14. Berger, M. J. & Colella, P. Local adaptive mesh refinement for shock hydrodynamics. _J. Comput. Phys._ 82, 64–84 (1989).
15. Teunissen, J. & Ebert, U. Afivo: a framework for quadtree/octree AMR with shared-memory parallelization and geometric multigrid methods. _Comput. Phys. Commun._ 233, 156–166 (2018).
16. Rollier, M., Zielinski, K. M. C., Daly, A. J., Bruno, O. M. & Baetens, J. M. A comprehensive taxonomy of cellular automata. _Commun. Nonlinear Sci. Numer. Simul._ 140, 108362 (2025).
17. Provatas, N., Goldenfeld, N. & Dantzig, J. Adaptive mesh refinement computation of solidification microstructures using dynamic data structures. _J. Comput. Phys._ 148, 265–290 (1999).
18. Gaston, D., Newman, C., Hansen, G. & Lebrun-Grandié, D. MOOSE: a parallel computational framework for coupled systems of nonlinear equations. _Nucl. Eng. Des._ 239, 1768–1778 (2009).
19. Greenwood, M. et al. Quantitative 3D phase field modelling of solidification using next-generation adaptive mesh refinement. _Comput. Mater. Sci._ 142, 153–171 (2018).
20. DeWitt, S., Rudraraju, S., Montiel, D., Andrews, W. B. & Thornton, K. PRISMS-PF: a general framework for phase-field modeling with a matrix-free finite element method. _npj Comput. Mater._ 6, 29 (2020).
21. Popinet, S. An accurate adaptive solver for surface-tension-driven interfacial flows. _J. Comput. Phys._ 228, 5838–5866 (2009).
22. Zhang, W. et al. AMReX: a framework for block-structured adaptive mesh refinement. _J. Open Source Softw._ 4, 1370 (2019).
23. Teyssier, R. Cosmological hydrodynamics with adaptive mesh refinement—a new high resolution code called RAMSES. _Astron. Astrophys._ 385, 337–364 (2002).
24. Bryan, G. L. et al. ENZO: an adaptive mesh refinement code for astrophysics. _Astrophys. J. Suppl. Ser._ 211, 19 (2014).
25. Stone, J. M., Tomida, K., White, C. J. & Felker, K. G. The Athena++ adaptive mesh refinement framework: design and magnetohydrodynamic solvers. _Astrophys. J. Suppl. Ser._ 249, 4 (2020).
26. Zhang, W., Myers, A., Gott, K., Almgren, A. & Bell, J. AMReX: block-structured adaptive mesh refinement for multiphysics applications. _Int. J. High Perform. Comput. Appl._ 35, 508–526 (2021).
27. Schive, H.-Y. et al. gamer-2: a GPU-accelerated adaptive mesh refinement code—accuracy, performance, and scalability. _Mon. Not. R. Astron. Soc._ 481, 4815–4840 (2018).
28. Wang, P., Abel, T. & Kaehler, R. Adaptive mesh fluid simulations on GPU. _New Astron._ 15, 581–589 (2010).
29. Giuliani, A. & Krivodonova, L. Adaptive mesh refinement on graphics processing units for applications in gas dynamics. _J. Comput. Phys._ 381, 67–90 (2019).
30. Liu, Z., Tian, F.-B. & Feng, X. An efficient geometry-adaptive mesh refinement framework and its application in the immersed boundary lattice Boltzmann method. _Comput. Methods Appl. Mech. Eng._ 392, 114662 (2022).
31. Farooqi, M. N. et al. Asynchronous AMR on multi-GPUs. In _Lecture Notes in Computer Science_ Vol. 11887 (eds Weiland, M. et al.) 113–123 (Springer, 2019).
32. Beckingsale, D., Gaudin, W., Herdman, A. & Jarvis, S. Resident block-structured adaptive mesh refinement on thousands of graphics processing units. In _Proc. 44th International Conference on Parallel Processing_ 61–70 (IEEE, 2015).
33. Wang, J. & Yalamanchili, S. Characterization and analysis of dynamic parallelism in unstructured GPU applications. In _Proc. IEEE International Symposium on Workload Characterization_ 51–60 (IEEE, 2014).
34. Hohenberg, P. C. & Halperin, B. I. Theory of dynamic critical phenomena. _Rev. Mod. Phys._ 49, 435–479 (1977).
35. Kobayashi, R. Modeling and numerical simulations of dendritic crystal growth. _Physica D_ 63, 410–423 (1993).
36. Steinbach, I. Phase-field models in materials science. _Model. Simul. Mat. Sci. Eng._ 17, 073001 (2009).
37. Francois, M. M. et al. Modeling of additive manufacturing processes for metals: Challenges and opportunities. _Curr. Opin. Solid State Mater. Sci._ 21, 198–206 (2017).
38. Berry, J. et al. Toward multiscale simulations of tailored microstructure formation in metal additive manufacturing. _Mater. Today_ 51, 65–86 (2021).
39. Allen, S. M. & Cahn, J. W. Ground state structures in ordered binary alloys with second neighbor interactions. _Acta Metall._ 20, 423–433 (1972).
40. Mullins, W. W. & Sekerka, R. F. Stability of a planar interface during solidification of a dilute binary alloy. _J. Appl. Phys._ 35, 444–451 (1964).
41. Plapp, M. & Karma, A. Multiscale random-walk algorithm for simulating interfacial pattern formation. _Phys. Rev. Lett._ 84, 1740–1743 (2000).
42. Zhang, R., Mao, S. & Haataja, M. P. Chemically reactive and aging macromolecular mixtures. II. Phase separation and coarsening. _J. Chem. Phys._ 161, 184903 (2024).
43. Brangwynne, C. P. et al. Germline P granules are liquid droplets that localize by controlled dissolution/condensation. _Science_ 324, 1729–1732 (2009).
44. Hyman, A. A., Weber, C. A. & Jülicher, F. Liquid–liquid phase separation in biology. _Annu. Rev. Cell Dev. Biol._ 30, 39–58 (2014).
45. Berry, J., Brangwynne, C. P. & Haataja, M. Physical principles of intracellular organization via active and passive phase transitions. _Rep. Prog. Phys._ 81, 046601 (2018).
46. Mao, S., Kuldinow, D., Haataja, M. P. & Košmrlj, A. Phase behavior and morphology of multicomponent liquid mixtures. _Soft Matter_ 15, 1297–1311 (2019).
47. Cahn, J. W. & Hilliard, J. E. Free energy of a nonuniform system. III. Nucleation in a two-component incompressible fluid. _J. Chem. Phys._ 31, 688–699 (1959).
48. Lifshitz, I. M. & Slyozov, V. V. The kinetics of precipitation from supersaturated solid solutions. _J. Phys. Chem. Solids_ 19, 35–50 (1961).
49. Wagner, C. Theorie der Alterung von Niederschlägen durch Umlösen (Ostwald Reifung). _Z. Elektrochem. Ber. Bunsenges. Phys. Chem._ 65, 581–591 (1961).
50. Helmholtz XLIII. On discontinuous movements of fluids. _Lond. Edinb. Dublin Philos. Mag. J. Sci._ 36, 337–346 (1868).
51. Toro, E. F. _Riemann Solvers and Numerical Methods for Fluid Dynamics_ 3rd edn (Springer, 2009).
52. McNally, C. P., Lyra, W. & Passy, J.-C. A well-posed Kelvin–Helmholtz instability test and comparison. _Astrophys. J. Suppl. Ser._ 201, 18 (2012).
53. Foullon, C., Verwichte, E., Nakariakov, V. M., Nykyri, K. & Farrugia, C. J. Magnetic Kelvin–Helmholtz instability at the Sun. _Astrophys. J. Lett._ 729, L8 (2011).
54. Smyth, W. & Moum, J. Ocean mixing by Kelvin–Helmholtz instability. _Oceanography_ 25, 140–149 (2012).
55. Rusanov, V. V. The calculation of the interaction of non-stationary shock waves and obstacles. _USSR Comput. Math. Math. Phys._ 1, 304–320 (1962).
56. Burau, H. et al. PIConGPU: a fully relativistic particle-in-cell code for a GPU cluster. _IEEE Trans. Plasma Sci. IEEE Nucl. Plasma Sci. Soc._ 38, 2831–2839 (2010).
57. Crespo, A. C., Dominguez, J. M., Barreiro, A., Gómez-Gesteira, M. & Rogers, B. D. GPUs, a new tool of acceleration in CFD: efficiency and reliability on smoothed particle hydrodynamics methods. _PLoS ONE_ 6, e20685 (2011).
58. Montessori, A. et al. Thread-safe lattice Boltzmann for high-performance computing on GPUs. _J. Comput. Sci._ 74, 102165 (2023).
59. Teyssier, R., Chapon, D. & Bournaud, F. The driving mechanism of starbursts in galaxy mergers. _Astrophys. J. Lett._ 720, L149–L154 (2010).
60. Shaw, D. E. et al. Anton 3. In _Proc. International Conference for High Performance Computing, Networking, Storage and Analysis_ 1–11 (ACM, 2021).
61. Mudigere, D. et al. Software–hardware co-design for fast and scalable training of deep learning recommendation models. In _Proc. 49th Annual International Symposium on Computer Architecture_ 993–1011 (ACM, 2022).
62. Cong, J. et al. FPGA HLS today: successes, challenges, and opportunities. _ACM Trans. Reconfigurable Technol. Syst._ 15, 1–42 (2022).
63. Dally, W. J., Turakhia, Y. & Han, S. Domain-specific hardware accelerators. _Commun. ACM_ 63, 48–57 (2020).
64. Rocki, K. et al. Fast stencil-code computation on a wafer-scale processor. In _SC20: International Conference for High Performance Computing, Networking, Storage and Analysis_ Vol. 58 1–14 (IEEE, 2020).
65. Watanabe, S. & Aoki, T. Large-scale flow simulations using lattice Boltzmann method with AMR following free-surface on multiple GPUs. _Comput. Phys. Commun._ 264, 107871 (2021).
66. Zhang, R. & Xia, Y. Source code for a dynamic block activation framework for continuum models. _Zenodo_ https://doi.org/10.5281/zenodo.14868458 (2025).

ACKNOWLEDGEMENTS

R.Z. was supported by the National Science Foundation (NSF) Materials Research Science and Engineering Center Program through the Princeton Center for Complex Materials (PCCM) (grant no. DMR-2011750). Y.X. was supported by the National Natural Science Foundation of China (grant no. 12204162). Useful discussions with M. P. Haataja, R. Teyssier, S. Cohen, J. Lalmansingh and Q. Cai are gratefully acknowledged. The simulations presented in this Resource were performed on computational resources managed and supported by Princeton Research Computing, a consortium of groups including the Princeton Institute for Computational Science and Engineering (PICSciE) and Research Computing at Princeton University.

AUTHOR INFORMATION

AUTHORS AND AFFILIATIONS

* Department of Mechanical and Aerospace Engineering, Princeton University, Princeton, NJ, USA: Ruoyao Zhang
* College of Materials Science and Engineering, Hunan University, Changsha, People’s Republic of China: Yang Xia

CONTRIBUTIONS

R.Z. and Y.X. conceptualized the study, designed the computational framework, implemented the code, analyzed results, visualized simulations and drafted the paper.

CORRESPONDING AUTHOR

Correspondence to Yang Xia.

ETHICS DECLARATIONS

COMPETING INTERESTS

The authors declare no competing interests.

PEER REVIEW

PEER REVIEW INFORMATION

_Nature Computational Science_ thanks Cody Permann, Tatu Pinomaa, Nicolò Scapin and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. Primary Handling Editor: Fernando Chirigati, in collaboration with the _Nature Computational Science_ team.

ADDITIONAL INFORMATION

PUBLISHER’S NOTE

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

SUPPLEMENTARY INFORMATION

* SUPPLEMENTARY INFORMATION: Supplementary Figs. 1 and 2, Supplementary model descriptions and Supplementary Tables 1 and 2.

SOURCE DATA

* SOURCE DATA FIG. 3: Excel file for the source data used in Fig. 3.
* SOURCE DATA FIG. 4: Excel file for the source data used in Fig. 4.
* SOURCE DATA FIG. 5: Excel file for the source data used in Fig. 5.

RIGHTS AND PERMISSIONS

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

ABOUT THIS ARTICLE

CITE THIS ARTICLE

Zhang, R., Xia, Y. A dynamic block activation framework for continuum models. _Nat Comput Sci_ 5, 345–354 (2025). https://doi.org/10.1038/s43588-025-00780-2

* Received: 30 August 2024
* Accepted: 19 February 2025
* Published: 17 March 2025
* Issue Date: April 2025
* DOI: https://doi.org/10.1038/s43588-025-00780-2
