- Select a language for the TTS:

- UK English Female

- UK English Male

- US English Female

- US English Male

- Australian Female

- Australian Male

- Language selected: (auto detect) - EN

Play all audios:

ABSTRACT Sepsis, septic shock, and cardiogenic shock are life-threatening conditions associated with high mortality rates, but differentiating them is complex because they share certain

symptoms. Using the Medical Information Mart for Intensive Care (MIMIC)-III database and artificial intelligence (AI), we aimed to increase diagnostic precision, focusing on Bayesian network

classifiers (BNCs) and comparing them with other AI methods. Data from 5970 adults, including 950 patients with cardiogenic shock, 1946 patients with septic shock, and 3074 patients with

sepsis, were extracted for this study. Of the original 51 variables included in the data records, 12 were selected for constructing the predictive model. The data were divided into training

and validation sets at an 80:20 ratio, and the performance of the BNCs was evaluated and compared with that of other AI models, such as the one rule classifier (OneR), classification and

regression tree (CART), and an artificial neural network (ANN), in terms of accuracy, sensitivity, specificity, precision, and F1-score. The BNCs exhibited an accuracy of 87.6% to 91.5%. The

CART model demonstrated a notable 91.6% accuracy when only three decision levels were used, whereas the intricate ANN model reached 90.5% accuracy. Both the BNCs and the CART model allowed

clear interpretation of the predictions. BNCs have the potential to be valuable tools in diagnostic tasks, with an accuracy, sensitivity, and precision comparable, in some cases, to those of

ANNs while demonstrating superior interpretability. SIMILAR CONTENT BEING VIEWED BY OTHERS ARTIFICIAL INTELLIGENCE BASED MULTISPECIALTY MORTALITY PREDICTION MODELS FOR SEPTIC SHOCK IN A

MULTICENTER RETROSPECTIVE STUDY Article Open access 28 April 2025 DEVELOPMENT OF A MACHINE LEARNING-BASED PREDICTION MODEL FOR SEPSIS-ASSOCIATED DELIRIUM IN THE INTENSIVE CARE UNIT Article

Open access 04 August 2023 EXTERNAL VALIDATION OF AN ARTIFICIAL INTELLIGENCE MODEL USING CLINICAL VARIABLES, INCLUDING ICD-10 CODES, FOR PREDICTING IN-HOSPITAL MORTALITY AMONG TRAUMA

PATIENTS: A MULTICENTER RETROSPECTIVE COHORT STUDY Article Open access 07 January 2025 INTRODUCTION Sepsis, septic shock, and cardiogenic shock are critical medical conditions characterised

by life-threatening organ dysfunction and high mortality rates. Distinguishing between these syndromes can be challenging, however, owing to their shared pathophysiological features. Sepsis

is defined as organ dysfunction resulting from a dysregulated host response to infection and can be detected by an acute increase in the total Sequential Organ Failure Assessment (SOFA)

score by ≥ 2 points due to infection. Septic shock is identified by persistent hypotension requiring vasopressors to maintain a mean arterial pressure ≥ 65 mm Hg and a serum lactate level

> 2.0 mmol/l despite adequate volume administration1,2. Cardiogenic shock, on the other hand, is diagnosed when patients exhibit a systolic blood pressure < 90 mmHg for ≥ 30 min or

require pharmacologic/mechanical circulatory support to maintain adequate blood pressure, accompanied by clinical signs of pulmonary congestion, pulmonary oedema, and impaired end-organ

perfusion3,4. Distinguishing between septic and cardiogenic shock is particularly challenging because of the overlapping pathophysiological mechanisms of both conditions, while septic shock

often involves a complex interplay of distributive shock, hypovolemia, impaired cardiac function, mitochondrial dysfunction, and coagulopathies5,6. Furthermore, the incidence of septic (31%)

and cardiogenic shock (28%) are nearly identical7, and both represent life-threatening conditions requiring rapid diagnosis and treatment to prevent further deterioration, the development

of serious consequences, or death. In recent years, the integration of artificial intelligence (AI) technologies, driven by a surge in big data, has garnered increasing attention across

various sectors, including healthcare. AI-based applications hold immense potential to revolutionise medical research and clinical practice, consistently demonstrating the capacity to

outperform human counterparts in certain instances8,9. Despite these promising achievements, the widespread implementation of AI in healthcare faces considerable challenges, one of the

foremost of which is the inherent lack of transparency of complex AI algorithms. Deep learning methods, especially artificial neural networks (ANNs), often operate as a "black

box," rendering the decision-making process inscribable and eroding trust in AI-derived solutions10. A potential remedy for this interpretability issue is the use of Bayesian network

classifiers (BNCs) as decision support tools. BNCs offer a unique advantage by graphically depicting direct and indirect variable dependencies, facilitating the understanding of causal

relationships among variables in disease prediction. This retrospective study aimed to fill this crucial gap by developing a reliable diagnostic assistance system. Leveraging patient data

from the Medical Information Mart for Intensive Care (MIMIC) III database, we focused on the intensive care conditions of sepsis, septic shock, and cardiogenic shock and employed various

BNCs for discriminating among them. These classifiers were subsequently benchmarked against conventional approaches such as the One Rule classifier (OneR), the Classification and Regression

Trees (CART) algorithm, and an artificial neural networks (ANN), paving the way for a comprehensive evaluation of model performance, interpretability, and potential to enhance clinical

decision-making in critical care scenarios. METHODS DATA SOURCE AND SELECTION Given the difficulties of obtaining primary medical data, the MIMIC-III database was utilised in this study.

This extensive, single-centre database consists of deidentified clinical data from patients admitted to critical care units of the Beth Israel Deaconess Medical Center in Boston,

Massachusetts, between 2001 and 201211. The data are widely accessible to researchers under a data use agreement, so the study was exempt from the need for specific ethical review.

Nevertheless, we adhered to fundamental ethical principles and ensured the validity and fairness of the study process. The database contains data from 53,423 adult hospital admissions (aged

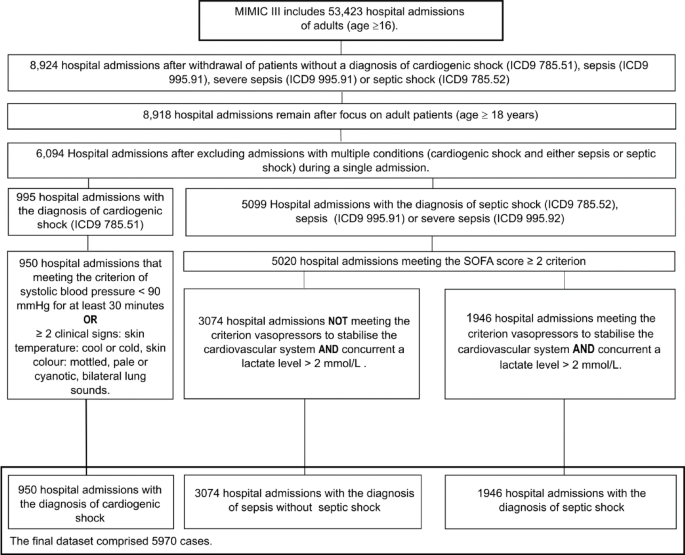

16 years or above)11. Among these patients, this study focused on patients aged ≥ 18 years. The inclusion criteria included admission with a diagnosis of sepsis, septic shock, or cardiogenic

shock according to the International Classification of Diseases, 9th Revision (ICD-9) diagnosis table (codes 785.51, 785.52, 995.91, and 995.92). Due to the constraints of the data in the

MIMIC-III collected prior to 2012, sepsis and septic shock admissions were re-evaluated on the basis of criteria from the 2016 and 2021 Surviving Sepsis Campaign guidelines1,2 and

cardiogenic shock guidelines3,4. A two-step reclassification process was conducted to differentiate between the sepsis and septic shock groups. Initially, patients diagnosed with either

sepsis, severe sepsis, or septic shock who had a SOFA score ≥ 2 were classified as having sepsis. Patients who did not fulfill this criterion were excluded from the data set. Within this

group, patients were then reclassified as septic shock cases if they also met the diagnostic criteria of vasopressor-dependent circulatory failure and hyperlactatemia (plasma lactate level

> 2 mmol/L). After being reallocated into the septic shock group, those cases were subsequently removed from the sepsis group. We used MIT-LCP/mimic-code to create the SOFA score table12.

The criteria for the cardiogenic shock group included a low systolic blood pressure (< 90 mmHg) for a period of ≥ 30 min or the presence of two of three clinical signs from among the

following: bilateral lung sounds, cool/cold skin temperature and pale/mottled/cyanotic skin colour; data on admissions for patients who did not meet these criteria were excluded. Patients

whose data did not adhere to the previously mentioned conditions were either reclassified or entirely excluded from this study. To prevent duplicate diagnoses from affecting the learning and

assessment processes of the models, patients diagnosed with multiple conditions (cardiogenic shock and either sepsis or septic shock) during a single admission were also excluded. The data

adjustment process is shown in Fig. 1. DATA PROCESSING The final dataset comprised 5,970 distinct hospital admissions, each considered a unique observation. To enhance the dataset, the

Elixhauser et al.13 comorbidity index was extracted from the MIMIC III diagnostic table, and selected categories were incorporated as variables in the dataset. This classification, along

with the index value, has been demonstrated to be highly important in predicting prognoses and mortality for a range of diseases and injuries. Compared with detailed ICD9 codes, it allows

better organisation of the corresponding data. The data from Quan et al.14, constructed via the MIT-LCP/mimic-code12 methodology, were employed to generate predictor variables for this

research. In addition, specific predictor variables for conditions such as cardiac arrhythmias, myocardial infarction and infections were formulated by combining different ICD9 codes into

diagnostic groups. We also introduced predictor variables for age, sex, SOFA score ≥ 2, vasopressor dependence, lactate level > 2 mmol/l, and other clinical signs and indicators

(Supplementary Table 1 online offers an overview of the created variables). FEATURE SELECTION A three-phase feature selection process was employed after cleaning the dataset for

restructuring and adaptation to the new guidelines. Initially, variables that were deemed irrelevant to disease diagnosis were excluded. In the second phase, features that did not provide

sufficient value and features exhibiting unsatisfactory importance due to low frequency or low variance between classes were discarded. The final phase involved evaluating the remaining

variables with the mutual information score (MIS), employing the median as the cut-off threshold over the arithmetic mean because of the enhanced resilience of this metric against outliers.

After the feature selection process, 12 of the 51 variables were included in the final dataset. Table 1 offers an in-depth overview of the included prediction variables. CLASSIFIERS EMPLOYED

To address the challenge of differentiating between the clinically similar conditions of sepsis, septic shock and cardiogenic shock, Bayesian network classifiers (BNCs) were employed. These

classifiers leverage probabilistic relationships among selected clinical parameters (e.g., vasopressor dependency, lactate levels, SOFA scores, and infection status) to calculate diagnostic

probabilities, thereby enabling nuanced differentiation among these clinically overlapping syndromes. Various BNCs were trained on the dataset and evaluated for their predictive power in

terms of accuracy, sensitivity, specificity, precision, F1-Score, and interpretability. To assess the usefulness of the BNCs, the results were compared with those of other commonly used

classifiers, such as a naive Bayes (NB) classifier, the One rule Classifier (OneR), a Classification and Regression Trees (CART)-based classifier, and a feed forward backpropagation

artificial neural network (ANN). The basic concepts, methods and relevant algorithms of the individual classifiers are briefly summarised below. NAIVE BAYES (NB) A probabilistic algorithm

based on Bayes’ theorem that operates under the assumption that all features are independent of one another. TREE AUGMENTED NAIVE BAYES (TAN) An enhancement of the NB, the TAN algorithm

introduces feature dependencies via a tree structure, enhancing prediction accuracy over its naive counterpart. It employs methods such as the Chow–Lui algorithm (Akaike information

criterion (AIC), Bayesian information criterion (BIC), and log-likelihood (LOG)) for structure optimisation. SEMI-NAIVE BAYES CLASSIFIER (SNBC) SNBC is an adaptation of NB that considers

feature interdependencies and eliminates, selects or joins features to improve classification accuracy. It uses algorithms such as backward sequential elimination and joining (BSEJ) and

forward sequential selection and joining (FSSJ). BENCHMARKING We used three alternative techniques/algorithms as benchmarks to evaluate our models. ONE RULE CLASSIFIER (ONER) This one-rule

algorithm classifies data on the basis of a single attribute. Despite the simplicity of the resulting model, OneR has been shown to deliver good results and thus serves an excellent

benchmark15. CLASSIFICATION AND REGRESSION TREE (CART) A decision tree trained through recursive partitioning. The tree’s structure represents the course of the subsequent classification

process, determined by the iterative division of the data into subgroups16. FEED-FORWARD BACKPROPAGATION NEURAL NETWORK (ANN) This algorithm accurately represents the biological nervous

system and is designed to mimic the communication between neurons. The feedforward aspect describes the direction of signal transmission, whereas the backpropagation aspect describes the

neural network’s learning process, wherein the loss function is minimised by adjusting the individual parameters through weights and biases. EVALUATION METRICS AND PROCEDURES All classifiers

were trained, validated, and tested. First, the data were randomly divided into training and test datasets at a ratio of 80:20. Performance metrics, including accuracy, sensitivity/recall,

specificity, precision, and F1-score, were derived from the resulting confusion matrices. Specifically, the true negative (TN), true positive (TP), false negative (FN), false positive (FP)

and true negative (TN) values presented in the confusion matrix were used to calculate the following metrics for qualifying the classifiers: $$Accuracy = \frac{TP + TN}{{TP + TN + FP +

FN}}$$ $$Sensitivity/{{Re}}call = \frac{TP}{{TP + FN}}$$ $$Specifity = \frac{TN}{{TN + FP}}$$ $${{Precision}} = \frac{{TP}}{{TP + FP}}$$ $$F1-Score = \frac{{2. Precision \cdot {{Re}}call}}{{

Precision + {{Re}}call}}$$ These metrics are class-specific and must therefore be calculated three times for this multiclass problem. Furthermore, the area under the curve (AUC) was

calculated for the multiclass classification following the methods of Hand and Till17. We performed tenfold cross-validation using accuracy and Cohen’s kappa as internal validation metrics

to assess the accuracy and reliability of the models independent of the choice of randomiser. Additionally, we attempted to address potential imbalances in the models through upsampling and

then compared the results with those obtained without upsampling. COMPUTATION AND VISUALISATION All computations and visualisations were performed with R Version 4.2.2, RStudio 2022.07.1

Build 554 and the packages bnclassify, caret, nnet, OneR, pRoc and rpart18,19,20,21,22,23. RESULTS This study explored the efficacy of Bayesian networks in addressing intricate

classification challenges within the field of medical diagnostics. Initially, various BNCs were compared, but the differences in their performance were negligible. The accuracy of the TAN

method ranged from 91.3 to 91.5%, whereas the SNBC model achieved an accuracy of 91.1% for the forwards sequences and 90.9% for the backwards sequences. Moreover, the NB method exhibited a

comparatively modest accuracy of 87.6% (Table 2). To further evaluate the outcomes of the Bayesian networks, the classifiers were benchmarked against established models (Table 2). Utilising

OneR as a preliminary benchmark revealed that vasopressor dependency was the paramount predictor, with 68.5% predictive power, followed by infection at 61.5% and lactate at 59.1%. These

findings are in accordance with the results of the MIS. Although these accuracy values were considerably lower than those of the BNCs, they provided preliminary insights into the

effectiveness of distinct predictors. Given the binary nature of the predictor variables, OneR can identify only two classes, which downgrades its utility from that of a benchmark model to

that of a tool for determining feature importance. In our evaluations, the CART model exhibited an accuracy of 91.6% through its rudimentary tree framework, employing only the predictors

vasopressor dependency, lactate and infection, the same ones that were most prominent in the OneR analysis. The BNCs were subsequently compared with the ANN. Despite numerous iterations

across different hidden layer architectures, the highest accuracy achieved by the ANN, obtained with a singular hidden layer and ten neurons, was 90.5%, whereas different multilayer ANNs

yielded accuracies between 85.0 and 88.0%. In the tenfold cross-validation, the TAN (BIC), TAN (AIC) and FSSJ models showed relatively low variance between quartiles in terms of accuracy and

Cohen’s kappa, indicating consistent performance across different samples, whereas the ANN showed significant performance degradation. The cross-validation process revealed that the TAN

models exhibited the best performance, with high accuracy and kappa values while exhibiting low variance. Nevertheless, all the models demonstrated a high degree of correlation, thereby

indicating the emergence of a common trend. The box plot diagram in Fig. 2 provides a comprehensive overview of the cross-validation results. All the employed models were assessed in terms

of their ability to accurately classify cardiogenic shock. While the sensitivities/recalls for sepsis and septic shock remained consistently above 90% across the models, those for

cardiogenic shock fluctuated between 77.3 and 83.5%. Upsampling was performed to balance the classes and investigate whether an imbalance within the training data may have been responsible

for this discrepancy. While this strategy increased cardiogenic shock sensitivity by an average of 4.5%, it compromised holistic model performance, as the overall accuracy decreased by an

average of 1.7%, and the sensitivities for septic shock and sepsis decreased by 2.9% and 2.8%, respectively. The models demonstrated a mean reduction in accuracy of 2.9% for the sepsis class

and 2.8% for the septic shock class, whereas that for the cardiogenic shock class decreased by 12.0% on average. Furthermore, the mean F1-scores of the models for the different classes

ranged between 0.006 and 0.044. DISCUSSION The urgency in diagnosing life-threatening conditions such as sepsis, septic shock, and cardiogenic shock, all of which have high mortality rates,

cannot be overstated. Our findings highlight the effectiveness of BNCs in facilitating prompt diagnoses of these ailments. To our knowledge, this is the first investigation of BNCs in the

differential diagnosis of sepsis, septic shock, and cardiogenic shock. Given the absence of directly analogous studies, we benchmarked our models against other AI algorithms. Prior

techniques for the early diagnosis of these conditions have employed clinical decision rules, logistic regression models, random forests, decision trees, and deep learning

techniques24,25,26,27,28. The latest research emphasises early detection before the disease can truly manifest, making direct comparisons with certain existing methods challenging, as our

focus was on immediate diagnosis24,25,26,27,28. Many AI-based diagnostic methods target a single condition (binary classification), whereas the models developed in our study perform

multiclass differentiation, further increasing the difficulty in directly comparing these methods. Challenging the conventional wisdom of ANNs as the AI gold standard, our BNCs, which are

inherently interpretable, exhibited comparable accuracy. Our findings suggest that BNCs are potent tools for intricate classification tasks in the field of medicine, demonstrating

substantial accuracy in multiclass predictions. Their inherent interpretability also makes them inherently appealing. As indicated above, the prevailing narrative holds that ANN and deep

learning methods are universal solutions, potentially overshadowing the utility of BNCs29, and the latest model is often assumed to perform best30. The findings of this study demonstrate

that BNCs are comparable to ANNs in terms of performance metrics and may even outperform them, thus challenging the prevailing assumption about the novelty and effectiveness of ANN models.

Our findings also call into question the prevailing hypothesis regarding a trade-off between accuracy and interpretability, instead suggesting that increased model complexity does not

necessarily equate to superior performance. The differences were only a few percentage points, however, and it is possible that the ANN could be optimised with additional training and

tuning. Notably, applying a straightforward hidden layer structure resulted in a notable increase in accuracy over more intricate structural configurations. The CART model, despite its

simplicity, outperformed some of the BNCs and the ANN. The rudimentary architecture of the NB model yielded suboptimal results, whereas the nuanced interconnections within the TAN methods

enhanced their accuracy. The SNBCs leveraged their ability to select features but were, unfortunately, not superior to the TANs in our study. Consistent with this notion, the BNCs in our

study demonstrated substantial decomposability, algorithmic transparency, and simulatability. The upsampling procedures we employed to increase the sensitivity/recall for the cardiogenic

shock class highlighted the trade-off between this metric and precision in multiclass approaches. While most of our models improved in predicting cardiogenic shock following upsampling, the

precision for this class significantly decreased. In contrast, the sensitivity/recall decreased for the other two classes, whereas the precision increased slightly. It is important that our

models be able to differentiate between the three classes with high accuracy; in this context, therefore, sensitivity/recall and precision represent essential metrics. The TAN AIC model

demonstrated a good balance between sensitivity/recall and precision without upsampling, whereas after upsampling, it demonstrated only a 2.1% greater sensitivity/recall and an 11.8% lower

precision for the cardiogenic shock category. This is further evidenced by the decrease in the F1-score, by 0.052 points. Moreover, without upsampling, commendable sensitivity/recall and

precision values were already demonstrated for the other two classes with this model. Consequently, the TAN (AIC) model was identified as the preferred option. A shared limitation across the

models was the difficulty in classifying cardiogenic shock. The cardiogenic shock is associated with a wide range of potential causes (e.g. myocardial infarction, post-myocardial syndrome,

valvular heart disease etc.)31, which significantly increases the heterogeneity within this cohort. Potential data or variable-related deficiencies might be another reason for this issue.

Critical insights from imaging, patient history, and clinical examinations, mainly presented as continuous text within the databases, were not explored. The promise of natural language

processing tools in refining such data warrants exploration. Our dataset exhibited an underrepresentation of myocardial infarction (60%), a critical predictor of cardiogenic shock, compared

with the frequency reported in previous studies on this condition (80%)3. Moreover, the incidence of myocardial infarctions during sepsis and septic shock admissions introduced a number of

uncertainties. Variables such as ‘vasopressor dependency’, ‘infection’ and ‘lactate’ were shown to be pivotal indicators for sepsis and septic shock, which aligns with contemporary sepsis

definitions and guidelines. However, the revision of outdated data may have influenced model learning, potentially skewing the predictive power of these indicators. The absence of temporal

contexts in our final dataset limited the integration of specific measured values, underscoring the need for temporally processable datasets for improving diagnostic accuracy, which should

be investigated in a follow-up study with more current data. Furthermore, because outdated data were employed in this study, prospective statements regarding the results in a clinical

setting cannot be made. Nevertheless, the retrospective outcomes are encouraging, suggesting that external validation may be associated with favourable results. Another limitation of the

study was that patients with concomitant conditions such as septic and cardiogenic shock were explicitly excluded to ensure clinically distinct phenotypes. Furthermore, no distinction was

made between patients with septic shock due to septic cardiomyopathy and those due to vasodilation (reduced vascular tone). While the models in this study displayed commendable performance

levels for a discrete classification, their diagnostic accuracy in individual cases decreases in proportion to pathophysiological complexity. This finding mirrors the clinical reality, where

the presence of overlapping pathophysiological profiles complicates the diagnosis32 and leaves a crucial diagnostic gap for the use of the models in a clinical context. Moreover, the

inherent heterogeneity of the study population presents a challenge to the generalisability of prediction models. Emerging evidence highlights the urgent need to explore sub-phenotypes to

improve clinical care33. Recent studies have shown that machine learning-based subphenotyping is highly relevant prognosis in critical care34,35,36,37,38 and validation of prediction

models39. Future work is needed to determine whether subphenotypes provide better model performance. In subsequent studies, the intention is to undertake external validation, enhance the

model, and execute a systematic evaluation of the model’s performance across sub-phenotypes. CONCLUSIONS This investigation aimed to design a BNC to aid in the diagnosis of sepsis, septic

shock, and cardiogenic shock, drawing data from the MIMIC-III database. Classifiers constructed from TAN algorithms demonstrated optimal performance, characterised by both reproducibility

and interpretability. Our findings highlight the efficacy of selecting BNCs for intricate classification tasks, such as diagnostic support systems. Notably, certain BNCs have the potential

to match the performance of or even outperform ANNs while ensuring consistent learning behaviours, commendable accuracy, and interpretability. Nevertheless, there is an obvious need for

ongoing improvements, particularly by integrating data with temporal relationships. DATA AVAILABILITY The data are widely accessible to researchers under a data use agreement. Researchers

seeking to use the database must formally request access. For details, see https://mimic.mit.edu/docs/gettingstarted/ or https://physionet.org/content/mimiciii/1.4/ ABBREVIATIONS * AIC:

Akaike information criterion * ANN: Feed forward backpropagation artificial neural network * AUC: Area under the curve * BIC: Bayesian information criterion * BNC: Bayesian network

classifier * BSEJ: Backward sequential elimination and joining * CART: Classification and regression tree * FN: False negative * FP: False positive * FSSJ: Forward sequential selection and

joining * LOG: LOG-likelihood * MIMIC: Medical Information Mart for Intensive Care * MIS: Mutual information score * MIT-LCP: Massachusetts Institute of Technology-Laboratory for

Computational Physiology * NB: Naive Bayes * OneR: One rule machine learning classification algorithm with enhancements * SNBC: Semi-Naïve Bayes classifier * TAN: Tree augmented Naive Bayes

* TN: True negative * TP: True positive REFERENCES * Singer, M. et al. The third international consensus definitions for sepsis and septic shock (Sepsis-3). _JAMA_ 315, 801–810 (2016).

Article CAS PubMed PubMed Central Google Scholar * Evans, L. et al. Surviving sepsis campaign: International guidelines for management of sepsis and septic shock 2021. _Intensive Care

Med._ 47, 1181–1247 (2021). Article PubMed PubMed Central Google Scholar * Vahdatpour, C., Collins, D. & Goldberg, S. Cardiogenic shock. _J. Am. Heart Assoc._ 8, e011991 (2019).

Article PubMed PubMed Central Google Scholar * Werdan, K. et al. Kurzversion der 2. Auflage der deutsch-österreichischen S3-Leitlinie infarkt-bedingter kardiogener schock—Diagnose,

monitoring und therapie. _Kardiologe_ 14, 364–395 (2020). Article Google Scholar * Standl, T. et al. The nomenclature, definition and distinction of types of shock. _Dtsch Arztebl Int._

115, 757–768 (2018). PubMed PubMed Central Google Scholar * Carbone, F., Liberale, L., Preda, A., Schindler, T. H. & Montecucco, F. Septic cardiomyopathy: From pathophysiology to the

clinical setting. _Cells_ 11, 2833 (2022). Article CAS PubMed PubMed Central Google Scholar * Bloom, J. E. et al. Incidence and outcomes of nontraumatic shock in adults using emergency

medical services in Victoria, Australia. _JAMA Netw Open_ 5, e2145179 (2022). Article PubMed PubMed Central Google Scholar * Davenport, T. & Kalakota, R. The potential for artificial

intelligence in healthcare. _Future Healthc. J._ 6, 94–98 (2019). Article PubMed PubMed Central Google Scholar * Yu, K. H., Beam, A. L. & Kohane, I. S. Artificial intelligence in

healthcare. _Nat. Biomed. Eng._ 2, 719–731 (2018). Article PubMed Google Scholar * Zihni, E. et al. Opening the black box of artificial intelligence for clinical decision support: A study

predicting stroke outcome. _PLoS ONE_ 15, e0231166 (2020). Article CAS PubMed PubMed Central Google Scholar * Johnson, A., Pollard, T. & Mark, R. MIMIC-III clinical database.

PhysioNet (2023). * Johnson, A., Pollard, T., Blundell, J., Gow, B., Erinhong, Paris, N., et al. _MIT-LCP/Mimic-Code: MIMIC Code v2.2.1_. Zenodo; (2022). * Elixhauser, A., Steiner, C.,

Harris, D. R. & Coffey, R. M. Comorbidity measures for use with administrative data. _Med. Care_ 36, 8–27 (1998). Article CAS PubMed Google Scholar * Quan, H. et al. Coding

algorithms for defining comorbidities in ICD-9-CM and ICD-10 administrative data. _Med. Care_ 43, 1130–1139 (2005). Article PubMed Google Scholar * Shaik, N. B., Pedapati, S. R., Taqvi,

S. A. A., Othman, A. R. & Dzubir, F. A. A. A feed-forward back propagation neural network approach to predict the life condition of crude oil pipeline. _Processes_ 8, 661 (2020). Article

Google Scholar * Breiman, L. _Classification and Regression Trees_ (Routledge, 1984). Google Scholar * Hand, D. J. & Till, R. J. A simple generalisation of the area under the ROC

curve for multiple class classification problems. _Mach. Learn._ 45, 171–186 (2001). Article Google Scholar * Mihaljevic, B., Bielza, C. & Larranaga, P. bnclassify: Learning Bayesian

network classifiers. _R J._ 10, 455 (2018). Article Google Scholar * Jouanne-Diedrich, H. V. OneR: one rule machine learning classification algorithm with enhancements (2017).

https://CRAN.R-project.org/package=OneR. Accessed 23 Feb 2024. * Venables, B. & Ripley, B. _Modern Applied Statistics with S_ (Springer, 2002). Book Google Scholar * Kuhn, M. caret:

Classification and regression training. 2022. https://CRAN.R-project.org/package=caret. Accessed 12 May 2024. * Robin, X. et al. pROC: An open-source package for R and S+ to analyze and

compare ROC curves. _BMC Bioinf._ https://doi.org/10.1186/1471-2105-12-77 (2011). Article Google Scholar * Therneau, T. & Atkinson, B. rpart: Recursive partitioning and regression

trees (2022). https://CRAN.R-project.org/package=rpart. Accessed 12 Feb 2024. * Wu, M., Du, X., Gu, R. & Wei, J. Artificial intelligence for clinical decision support in sepsis. _Front

Med._ 8, 665464 (2021). Article Google Scholar * Bai, Z. et al. Development of a machine learning model to predict the risk of late cardiogenic shock in patients with ST-segment elevation

myocardial infarction. _Ann. Transl. Med._ 9, 1162 (2021). Article CAS PubMed PubMed Central Google Scholar * Chang, Y. et al. Early prediction of cardiogenic shock using machine

learning. _Front Cardiovasc. Med._ 9, 862424 (2022). Article CAS PubMed PubMed Central Google Scholar * Hu, Y., Lui, A., Goldstein, M., Sudarshan, M., Tinsay, A., Tsui, C., et al. A

dynamic risk score for early prediction of cardiogenic shock using machine learning. arXiv https://doi.org/10.48550/arXiv.2303.12888 (2023). * Rahman, F. et al. Using machine learning for

early prediction of cardiogenic shock in patients with acute heart failure. _J. Soc. Cardiovasc. Angiogr. Interv._ 1, 100308 (2022). PubMed PubMed Central Google Scholar * Ahmed, M. &

Islam, A. K. M. N. Deep learning: Hope or hype. _Ann. Data Sci._ 7, 427–432 (2020). Article Google Scholar * Johansson, U., Sönströd, C., Norinder, U. & Boström, H. Trade-off between

accuracy and interpretability for predictive in silico modeling. _Future Med. Chem._ 3, 647–663 (2011). Article CAS PubMed Google Scholar * Ng, R. & Yeghiazarians, Y. Post myocardial

infarction cardiogenic shock: A review of current therapies. _J. Intensive Care Med._ 28, 151–165. https://doi.org/10.1177/0885066611411407 (2013). Article PubMed Google Scholar * Sato,

R., Hasegawa, D., Guo, S., Nuqali, A. E. & Moreno, J. E. P. Sepsis-induced cardiogenic shock: Controversies and evidence gaps in diagnosis and management. _J. Intensive Care_ 13, 1.

https://doi.org/10.1186/s40560-024-00770-y (2025). Article PubMed PubMed Central Google Scholar * Soussi, S. et al. Identifying biomarker-driven subphenotypes of cardiogenic shock:

Analysis of prospective cohorts and randomized controlled trials. _EClinicalMedicine_ 79, 103013. https://doi.org/10.1016/j.eclinm.2024.103013 (2025). Article PubMed Google Scholar *

Zweck, E. et al. Phenotyping cardiogenic shock. _J. Am. Heart Assoc._ 10, e020085. https://doi.org/10.1161/JAHA.120.020085 (2021). Article PubMed PubMed Central Google Scholar * Jentzer,

J. C. et al. Machine learning approaches for phenotyping in cardiogenic shock and critical illness: Part 2 of 2. _JACC Adv._ 1, 100126. https://doi.org/10.1016/j.jacadv.2022.100126 (2022).

Article PubMed PubMed Central Google Scholar * Hu, C., Li, Y., Wang, F. & Peng, Z. Application of machine learning for clinical subphenotype identification in sepsis. _Infect. Dis.

Ther._ 11, 1949–1964. https://doi.org/10.1007/s40121-022-00684-y (2022). Article PubMed PubMed Central Google Scholar * Seymour, C. W. et al. Derivation, validation, and potential

treatment implications of novel clinical phenotypes for sepsis. _JAMA_ 321, 2003–2017. https://doi.org/10.1001/jama.2019.5791 (2019). Article CAS PubMed PubMed Central Google Scholar *

Yang, J. et al. Identification of clinical subphenotypes of sepsis after laparoscopic surgery. _Laparosc. Endosc. Robot. Surg._ 7, 16–26. https://doi.org/10.1016/j.lers.2024.02.001 (2024).

Article Google Scholar * Subbaswamy, A. et al. A data-driven framework for identifying patient subgroups on which an AI/machine learning model may underperform. _NPJ Digit. Med._ 7, 334.

https://doi.org/10.1038/s41746-024-01275-6 (2024). Article PubMed PubMed Central Google Scholar Download references AUTHOR INFORMATION AUTHORS AND AFFILIATIONS * Department of

Anaesthesiology and Critical Care, Klinikum Aschaffenburg-Alzenau, Aschaffenburg, Germany Dirk Obmann & York A. Zausig * Department of Anaesthesiology, University of Regensburg,

Regensburg, Germany Dirk Obmann, Bernhard Graf & York A. Zausig * Faculty of Engineering, Competence Centre for Artificial Intelligence, TH Aschaffenburg (University of Applied

Sciences), Aschaffenburg, Germany Philipp Münch & Holger von Jouanne-Diedrich Authors * Dirk Obmann View author publications You can also search for this author inPubMed Google Scholar *

Philipp Münch View author publications You can also search for this author inPubMed Google Scholar * Bernhard Graf View author publications You can also search for this author inPubMed

Google Scholar * Holger von Jouanne-Diedrich View author publications You can also search for this author inPubMed Google Scholar * York A. Zausig View author publications You can also

search for this author inPubMed Google Scholar CONTRIBUTIONS HvJD and YZ originated the idea. DO and PM developed the following study objectives under the supervision of HvJD and YZ. DO and

PM performed the data preprocessing, programming, feature selection and evaluation of the results. DO was responsible for writing the paper. PM, HvJD, BG and YZ supported the editing of the

manuscript and added essential comments to the paper. All authors read and approved the final manuscript. CORRESPONDING AUTHOR Correspondence to Dirk Obmann. ETHICS DECLARATIONS COMPETING

INTERESTS The authors declare no competing interests. ETHICS APPROVAL AND CONSENT TO PARTICIPATE An inquiry was submitted to the Ethics Committee of the Bavarian Medical Association.

Following an internal review, no further consultation with the ethics committee was deemed necessary. The data are widely accessible to researchers under a data use agreement, so the study

was exempt from the need for specific ethical review. Nevertheless, we adhered to fundamental ethical principles and ensured the validity and fairness of the study process. ADDITIONAL

INFORMATION PUBLISHER’S NOTE Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations. ELECTRONIC SUPPLEMENTARY MATERIAL Below is

the link to the electronic supplementary material. SUPPLEMENTARY MATERIAL 1 RIGHTS AND PERMISSIONS OPEN ACCESS This article is licensed under a Creative Commons

Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give

appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission

under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons

licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by

statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit

http://creativecommons.org/licenses/by-nc-nd/4.0/. Reprints and permissions ABOUT THIS ARTICLE CITE THIS ARTICLE Obmann, D., Münch, P., Graf, B. _et al._ Comparison of different AI systems

for diagnosing sepsis, septic shock, and cardiogenic shock: a retrospective study. _Sci Rep_ 15, 15850 (2025). https://doi.org/10.1038/s41598-025-00830-9 Download citation * Received: 27

December 2024 * Accepted: 30 April 2025 * Published: 06 May 2025 * DOI: https://doi.org/10.1038/s41598-025-00830-9 SHARE THIS ARTICLE Anyone you share the following link with will be able to

read this content: Get shareable link Sorry, a shareable link is not currently available for this article. Copy to clipboard Provided by the Springer Nature SharedIt content-sharing

initiative KEYWORDS * Artificial intelligence * Ai * Decision support systems * Sepsis * Septic shock * Cardiogenic shock