ABSTRACT

This study investigated the influence of body motion on an echolocation task. We asked a group of blindfolded, sighted novice participants to walk along a corridor made of plastic sound-reflecting panels. By self-generating mouth clicks, the participants attempted to identify a spatial property of the corridor, i.e. whether it had a left turn, a right turn or a dead end. They were asked to explore the corridor and to stop whenever they were confident about its shape. Their body motion was captured by a camera system and coded. Most participants were able to accomplish the task, with a percentage of correct guesses above chance level. We found a mutual interaction among variables that can lead to optimal echolocation skills: head motion, which accounts for spatial exploration; the point at which the person stops moving; and the number of correct guesses about the spatial structure. The results confirm that sighted people are able to use self-generated echoes to navigate in a complex environment. The inter-individual variability and the quality of echolocation performance seem to depend on how, and how much, the space is explored.


INTRODUCTION

Echolocation is the ability to acquire spatial information from the reflection and the timbre of sounds. It is well known that humans can develop such skills1,2,3, which can be learned by blind4,5 and sighted individuals6,7. In the last few years a number of studies have investigated how sounds can be used for locomotion in the absence of vision, and most of these studies have tested echolocation. Rosenblum _et al_.8 showed that sighted blindfolded participants were able to detect and walk up to the estimated position of a wall, and that they were more accurate when emitting sounds during motion than when standing still, for four distances (about 90 cm, 180 cm, 275 cm and 365 cm). Kolarik _et al_.9 assessed the ability of blindfolded sighted people to detect and circumvent an obstacle using mouth clicks, compared with visual guidance. They showed that auditory information was sufficient to guide participants around the obstacle without collision, but that movement time and the number of velocity corrections (changes in forward velocity along the path) increased compared with visual guidance. In a second study, Kolarik _et al_.10 used the same task to compare the performance of blindfolded sighted participants, blind non-echolocators and one blind echolocator, using both self-generated sounds and an electronic sensory substitution device (SSD). They found that, when using audition, blind non-echolocators navigated better than blindfolded sighted participants, with fewer collisions, shorter movement times, fewer velocity corrections and a greater obstacle detection range. In contrast, when using the SSD, the performance of the two groups was comparable. The expert echolocator performed better than the other two groups when using self-generated clicks, but comparably to them when using the SSD. With the SSD, all three groups gave 100% correct responses when detecting and circumventing the obstacle. These findings support the _enhancement_ hypothesis: vision loss leads to enhanced auditory spatial ability due to extensive experience with, and reliance on, auditory information11,12 and to cortical reorganization13,14,15. Similar results were found by Fiehler _et al_.16: when listening to pre-recorded binaural echolocation clicks generated while a person walked along a corridor, blind expert echolocators performed better than sighted novices in judging the main direction of the corridor (left, right or straight ahead). Even after training, the sighted participants performed around chance level.

Head movements during echolocation seem to play a crucial role5. Wallmeier and Wiegrebe17 showed that head rotations during echolocation can improve performance in a complex environmental setting. They also reported that during echolocation participants tend to orient their body and head towards a specific location18.

Here, we used the task of Fiehler _et al_.16, but instead of using pre-recorded echolocation clicks, we asked participants to freely perform the task in a real environment, while recording their body motion.
Specifically, we installed inside a reverberant room a real corridor made of sound-reflecting panels. We asked participants to judge a spatial property of the corridor, i.e. whether it was

turning left, right or had a dead end. Importantly, participants were free to stop anywhere they wished when guessing the shape of the corridor. First, we wanted to test whether novice

blindfolded sighted participants were able to perform such a task. We also wished to compare whether the performance obtained in the study of Fiehler _et al_.16 was possibly influenced by

the use of binaural recordings. We hypothesized, in particular, that understanding spatial properties of unknown spaces is modulated by behavioural variables, such as body motion. If this is

true, then observing echolocation in real setups might extend knowledge about how this skill is developed with information that virtual setups a priori may exclude. More generally, we

sought for body movements that can be overt signs of optimal echolocation skills. To assess this, we used a motion capture system to record and code the kinematics of the participants who

walked along a corridor while echolocating. First, we took into account several behavioral variables: the average and variability of velocity, the duration of motion, the position of each

participant in the room at the moment of the response, and the motion of the head. Then we tested whether these variables correlated with the percentage of correct responses in the three

possible shapes, i.e. turn left, right or straight ahead. We derived a predictive model that shows how the probability of correct guessing is accounted for by the variables explaining most

of the behavioural variance. Finally, we recorded a video of the participants while they were performing echolocation to monitor the task. From the video we were able to extract the audio

and made a qualitative analysis of a typical participant, since the main scope of this work regards evaluating kinematics during echolocation. MATERIALS AND METHODS PARTICIPANTS Nine sighted

participants (4 females, with an average age of 27.5 years, SD = 7 years) were recruited. All participants gave written informed consent before starting the test. All participants took an

audiometric test to check for possible hearing impairments. The test was performed automatically by an audiometer (Amplaid A1171), by presenting tones that ranged between 200 Hz and 12 KHz

at a stable intensity of 20 dB, while asking the participant to press a button when the tone became audible. One of the participants did not pass the test and was excluded from the

experiment. None of the participants had prior experience in using self-generated sounds to perceive objects. The study followed the tenets of the Declaration of Helsinki and was approved by

the ethics committee of the local health service (Comitato Etico, ASL 3, Genova). STIMULI The task was performed in a reverberant room (4.6 m × 6 m × 4 m). The floor of the room made by

parquet, was completely covered by a 5 mm polyester carpet. The walls were made of concrete, more than 50 cm thick and plastered. The room had three doors of solid wood and one window,

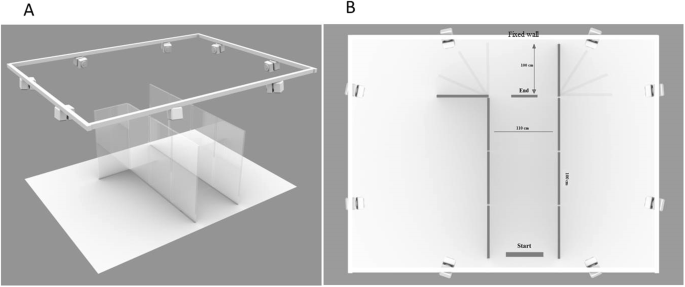

covered by solid wood panels. The high ceiling (about 4 m) was flat. The T60 of the reverberant room was approximately 1.4 seconds. We built a corridor (see Fig. 1) composed of 8 panels of

poly-methyl methacrylate (PMMA). They were 2 m high and 1 m wide and were placed vertically next to each other. Each panel was supported by a metal frame positioned outside the corridor, so

as not to interfere with the walking task or with sound reflections. The metal frame was provided with wheels to facilitate the movement of the panels between the trials. The corridor was

created along the smaller side of the room, so as to use one of the walls (made of concrete) of the room as the end of the corridor; to create the side walls we used the panels of PMMA, 4

for each side. The corridor was 4 m long and 1.1 m wide and was set in three different shapes: opened to the left, to the right or closed from both sides (see Fig. 1B for exact dimensions).
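The stop-position and speed measures defined later in this section and in Table 1 (DLS, DF, AV and MD) depend on this corridor geometry. The sketch below shows one way they could be derived from a tracked trajectory. It is illustrative only and is not the authors' Matlab code: the coordinate frame (x measured from the left side wall, y along the corridor towards the concrete end wall, both in metres), the use of the first and last frames as start and stop points, tracking a single trunk marker projected onto the floor, and all names are our assumptions.

```python
import math

# Dimensions and frame rate come from the text; everything else is a hypothetical sketch.
CORRIDOR_LENGTH_M = 4.0   # entrance to end wall
CORRIDOR_WIDTH_M = 1.1    # left side wall to right side wall
FRAME_RATE_HZ = 100.0     # Vicon sampling rate

def stop_point_measures(trajectory):
    """Compute MD, AV, DLS and DF from a list of (x, y) floor positions in metres.

    Assumed frame: x = distance from the left side wall,
    y = distance from the corridor entrance (end wall at y = CORRIDOR_LENGTH_M).
    The first frame is treated as the start and the last frame as the stop point.
    """
    if len(trajectory) < 2:
        raise ValueError("need at least two frames")
    (x0, y0), (x1, y1) = trajectory[0], trajectory[-1]
    if not 0.0 <= x1 <= CORRIDOR_WIDTH_M:
        raise ValueError("stop point lies outside the corridor width")
    md = (len(trajectory) - 1) / FRAME_RATE_HZ   # motion duration in seconds
    # AV uses only the start and stop points, ignoring the point-to-point path
    av = math.hypot(x1 - x0, y1 - y0) / md
    dls = x1                                     # distance to the left side wall
    df = CORRIDOR_LENGTH_M - y1                  # distance to the end (front) wall
    return {"MD": md, "AV": av, "DLS": dls, "DF": df}

if __name__ == "__main__":
    # Hypothetical 10 s straight walk along the corridor midline
    walk = [(0.55, 3.35 * i / 1000) for i in range(1001)]
    print(stop_point_measures(walk))
```

The example walk is chosen so that DF comes out at about 0.65 m; real data would also need a rule for detecting when motion actually starts and stops, which this sketch does not attempt.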

To record body kinematics we used an infrared motion capture system with eight cameras (frame rate 100 Hz; Vicon Motion Systems, Oxford, UK). The cameras were placed along the perimeter of the room at a height of about 3 m (see Fig. 1), so that at least 3 cameras could focus on every corner of the corridor at the same time, ensuring optimal recordings. Each participant was outfitted with eight lightweight retro-reflective hemispheric markers (1 cm in diameter): three markers on the head, forming a triangle with the forehead marker as its apex; one on each shoulder; one at the level of the breastbone; one on the right elbow; and one on the right wrist (see Fig. 2). A model of each participant's marker placement was then calibrated using Vicon's Nexus® software. The markers on the elbow and wrist were not used in the data analysis, because none of the participants used finger snaps as echolocation signals. After data acquisition, each trial was individually inspected to check that the model had been correctly loaded after pre-processing. We applied a low-pass Butterworth filter with a 6 Hz cut-off to smooth the marker trajectories. Variables related to head and body movements were computed with custom-written Matlab® scripts.

The definitions of the variables are presented in Table 1. Average velocity (AV) and variability of velocity (VV) were computed without considering the point-to-point trajectory of the participants: only the start and end point locations were considered, and the distance between them was divided by the total motion duration (MD). The Distance to the Left Side (DLS) and the Distance to the Front (DF) were used to reconstruct where the participant stopped with respect to the end of the corridor, and whether the stop point was closer to either wall. Finally, Head Movement (HM), Head Movement on the Left (HML) and Head Movement on the Right (HMR) accounted for head motion. HM gives a general idea of the amount of head movement made by the participant, whereas HML and HMR indicate how often the lateral portions of space are explored. A head position contributed to HML (or HMR) when the head was to the left (or right) of the sagittal plane perpendicular to the shoulders, which was taken as the relative reference. We placed a GoPro camera (HERO4) between the two Vicon cameras on the left wall (Fig. 1A), so that we could record all the area
of the corridor.

PROCEDURE

The participants were instructed on how to generate echolocation signals using mouth clicks, taking as an example the clicks generated by expert echolocators (recordings were found in the supporting information of the paper by Thaler _et al_.19). They practiced for a few minutes to generate clicks that were as similar to each other as possible. Before entering the experimental room, all participants were blindfolded, to prevent them from gaining prior knowledge of the structure of the room and the setup.

First, each participant performed a training session, in which the experimenter led them to the 'Start' point (see Fig. 1B) of the corridor. From that position they were instructed to walk straight through the corridor. The participant was free to move; however, in this training session, a heavy box (0.8 m × 0.5 m × 0.5 m) was placed on the ground at the 'End' point (see Fig. 1B), 1 m from the end wall, to force the participant to stop and, within 20 s, estimate the shape of the corridor: open to the left, open to the right or closed on both sides. The participant was not aware of the position of the box relative to the end wall and was always forced to respond. If the participant touched the walls of the corridor, the trial was repeated. Each participant performed 27 trials, 9 for each corridor configuration. No feedback was provided about the correct response.

The experimental and training sessions were identical, except for the stop point. The stop point was not present in the experimental session: here, the experimenter asked each participant to stop as soon as they understood the shape of the corridor and to give their answer right away. The trial was repeated if the participant touched the walls of the corridor. In the experimental session, too, each participant performed 27 trials, 9 for each corridor configuration.

RESULTS

WE ANALYZED THE KINEMATICS OF HEAD AND BODY FOR THE EXPERIMENTAL CONDITION ONLY

The participants were able to perform the task without collision on 75% of the
trials (SD = 15%). Table 2 reports the average and standard deviation of the variables defined in Table 1. To understand the relationship between these behavioural variables, we ran a factor analysis using a _varimax rotation_20, which maximizes the sum of the variances of the squared normalized loadings. We extracted four factors that explained most of the variance in the data (64.2%, χ² = 2.7, p = 0.26). We named these factors Time, Head exploration, Head and Space. Figure 3 shows the outcome of the factor analysis, namely the loadings of all the variables on the four factors, i.e. the contribution of each variable to each underlying factor. The first factor mainly included AV, VV and MD, which are related to the time dimension (TIME factor). The second factor was mainly influenced by HML and HMR, variables related to exploration with the head (HEAD EXPLORATION factor), with a contribution from the spatial variable DF. The third factor was almost purely related to head movements (HEAD factor). Finally, the fourth factor is related to the space domain (SPACE factor), because of the strong weight of the DF variable.

Regarding performance, we checked whether the percentage of correct responses (i.e. correct guesses about the corridor shape) was above chance level (i.e. 33%) in both the training and the experimental session (see Fig. 4). The percentage of correct responses was 68.28% in the training session (t-test, t(7) = 6.7, p < 0.001) and 58% in the experimental session (t-test, t(7) = 2.71, p = 0.03), both significantly above chance level. We also tested whether performance depended on the shape of the corridor: a one-way ANOVA with factor SHAPE did not show any significant difference (F(2,14) = 1.48, p = 0.26).

We then tested whether the kinematic variables were related to the participants' ability to echolocate. To this aim, we used the scores of each factor obtained from the factor analysis and the variable CORRIDOR'S SHAPE (open to the left, open to the right, closed) as independent variables in a logistic regression model21, with RESPONSE (correct or incorrect) in the echolocation task as the dependent variable. We found significant main effects of the factors HEAD EXPLORATION (χ² = 8.027, p = 0.004), HEAD (χ² = 4.54, p = 0.03) and SPACE (χ² = 14.14, p = 0.0001). Only one interaction was significant: CORRIDOR'S SHAPE × HEAD EXPLORATION × HEAD × SPACE (χ² = 6.15, p = 0.04). The TIME
factor was not linked to RESPONSE. Because the significant interaction is difficult to represent graphically, Fig. 5 shows the probability of a correct response predicted by the model for each level of CORRIDOR'S SHAPE as a function of each single factor: the steeper the slope, the more the probability of giving a correct response changes with that factor. Importantly, the strongest predictor of performance (i.e. the steepest slope) was the SPACE factor. More specifically, Fig. 5 shows that the highest probability of correctly guessing the corridor shape is associated with negative values of the SPACE factor, and therefore with larger values of DF and lower values of MD: the earlier the spontaneous stop point (i.e. the farther away from the end wall), the better the guess. On average, the participants stopped 0.65 m (SD = 0.29 m) from the end of the corridor. To check whether this result was unbiased with respect to corridor shape, we tested whether DF differed as a function of corridor shape (Fig. 6). As expected, a one-way ANOVA with factor SHAPE did not show any significant difference (F(2,14) = 2.35, p = 0.12).

Since the HEAD EXPLORATION factor appears to be important, we hypothesized that it could be intertwined with the vocal emissions. That is, if acoustic knowledge about the environment (specifically about the shape of the corridor) is gained through head exploration, then most clicks (i.e. more than 50%) should be emitted while the head is turning. Therefore, in a sub-group of participants (n = 4) we investigated whether the "vocal emissions" were produced during exploration with the head. We considered significant head motion both when the participant was walking and when he/she was standing still. Specifically, we synchronized the beginning of the audio recordings with the head motion (variable HM of Table 1) and considered the intervals in which the head was rotated by more than ±5 degrees with respect to the sagittal plane. For each interval we counted the number of vocal emissions, to calculate the percentage of clicks produced during head motion (example trial in Fig. 7A). Most of the "vocal emissions" (62%, Fig. 7B) were produced while the head was moving (two-tailed t-test against the chance level of 50%, t = 3.22, p = 0.048).

DISCUSSION

The novelty of this study lies in the fact that, in addition to identifying kinematic variables of echolocation, we have identified the influence
and the interaction of each kinematic variable with echolocation performance. The main aims of this study were to test (1) whether sighted people are able to perform an echolocation task in a complex real environment, without using recordings, and (2) whether some behavioural variables, such as the average and variability of velocity, motion duration, distance from the end wall and head movements (Table 1), might be correlated with the percentage of correct responses of naïve sighted echolocators in such a real environment. Regarding the first aim, we tried to keep the environment as ecological as possible: we performed the task in a reverberant room, with no sound-absorbing materials attenuating internal or external noises, and we used different kinds of sound-reflecting materials (the walls along the corridor were made of Plexiglas, whereas the wall at the end of the corridor was made of concrete). Previous studies have already shown that sighted people can learn echolocation in a brief amount of time by performing tasks such as detection, size perception or acuity discrimination6,7,22,23, which involve sound reflections from a restricted set of objects. None of these studies tested the ability to perform in more complex scenarios, with reflecting walls in multiple configurations and with freedom to move the whole body.

Our first result is that sighted people are able to perform a complex auditory task such as understanding the shape of a corridor. Unlike in the behavioural results of Fiehler _et al_.16, where the performance of sighted people was at chance level, we found that sighted people do accomplish such a task. The different result is probably due to the way in which the task was performed: in Fiehler's study, binaural recordings were used (since the main purpose of that study was to investigate the neural correlates using fMRI), whereas in our study the participants could link acoustic, proprioceptive and vestibular perceptual cues, thereby possibly integrating multiple sources of information17 and exploiting sensory-motor associations24.
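The claim that sighted participants accomplished the task rests on the one-sample t-tests against the 33% chance level reported in the Results (8 participants, hence 7 degrees of freedom). A minimal sketch of that statistic follows. The function name and the example proportions are hypothetical and are not the study's data; obtaining a p-value would additionally require the t distribution, for example via `scipy.stats.ttest_1samp`.

```python
import math
import statistics

CHANCE = 1 / 3  # three corridor shapes: a random guess is correct 1/3 of the time

def one_sample_t(proportions, mu0=CHANCE):
    """Return (t, df) for H0: the mean proportion of correct guesses equals mu0.

    proportions: one proportion correct per participant (hypothetical values).
    """
    n = len(proportions)
    if n < 2:
        raise ValueError("need at least two participants")
    # statistics.stdev uses the n - 1 (sample) denominator
    se = statistics.stdev(proportions) / math.sqrt(n)
    return (statistics.mean(proportions) - mu0) / se, n - 1

if __name__ == "__main__":
    # Hypothetical per-participant proportions correct (not the study's data)
    t, df = one_sample_t([0.5, 0.6, 0.7])
    print(f"t({df}) = {t:.2f}")  # prints t(2) = 4.62
```

With eight participants, as in this study, the function would report 7 degrees of freedom.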

It should be emphasized that, although we tried to make the experiment as realistic as possible, it has some limitations, such as the use of the same reflecting material for both side walls; different materials could increase or decrease performance. We speculate that if we had used less sound-reflecting materials, such as plasterboard, the participants would have obtained less acoustic information from the echoes. In this study we chose to obtain as much information as possible from reflected sounds because all our participants were completely naïve to echolocation tasks. We do not exclude that experienced participants might echolocate well even with less sound-reflecting walls. In this vein, blind persons who are starting echolocation training, especially people who have recently lost their sight, might benefit from setups such as the one considered in this study. The limited size of the room did not allow us to change the starting point, so on some occasions the participants could have relied on information other than echoes to reach the end point, for example by counting the steps needed to reach the stop point. This is especially true for the training session, in which the path to cover was always identical. It is less true for the experimental session, in which the task was to stop as soon as the participant understood the shape of the corridor, rather than to reach the end point set in the training session, so the path covered could vary considerably between participants and trials.

Furthermore, in a sub-sample of participants, we checked when the "vocal emissions" were produced. We found that the majority of signals were associated with head motion. This result suggests that active exploration of the environment with the head was associated with acoustic exploration ("vocal emissions"). It does not exclude that knowledge can be gained when clicks are produced with the head aligned with the sagittal plane, but it suggests that clicks are more frequently emitted when the head is rotated. Indirectly, this explains why head rotation modulated the correctness of responses: it can reasonably be argued that head motion is relevant to gaining knowledge about environmental features, because it is during head motion that vocal emissions are produced and therefore acoustic feedback is obtained. Note that our participants received no prior instructions about the role of the head; therefore, our results describe spontaneous
exploration strategies. Regarding the kinematic data, based on our results we identify three main factors that might influence echolocation performance: time, space and head motion.

MOTION DURATION SEEMS NOT TO INFLUENCE ECHOLOCATION PERFORMANCE

Our first behavioural factor was related to time. It correlates positively with the average velocity and its variability and, as expected, negatively with the duration of motion. We did not find an influence of completion time on the number of correct responses; that is, fast body motion does not seem to be related to a better understanding of an unknown environment. A similar result appears when evaluating travel aids such as white canes, which reduce collisions but do not necessarily decrease task completion time25. Likewise, completion time appears to be the weakest predictor of performance when both blind and visually impaired persons get explicit feedback on the spatial properties of unknown paths26. Considering that head motion seems important for correctly guessing the corridor shape, we interpret that exploring acoustic properties by turning the head takes time: gathering information through head exploration may cost the participant completion time, which therefore does not seem to be a relevant performance predictor. Further investigation of how effectively the body moves, or stops and then spends time, while acquiring information is necessary to clarify this aspect. Along the same lines, velocity does not seem to be related to performance: our two variables related to the average body velocity and its variability are only accounted for by the TIME factor, which does not predict performance. Consistently, they are absent from every other factor that has an effect on performance. Our results resonate with current rehabilitation practice, where outcomes measured by travel time are somewhat inconclusive and do not reflect the benefits obtained from orientation and mobility treatments27.

HEAD MOTION APPEARS CRUCIAL FOR CORRECT ECHOLOCATION

Our second behavioural factor was related
to head exploration. It correlates positively with the average angle when the head is rotated to the left of the body midline (i.e. net of shoulder rotation) and, as expected, negatively with the average angle when the head is rotated to the right. Interestingly, the factor accounts for almost equal amounts of these two variables, meaning that the influence of head motion does not seem to be biased by lateralization. This reflects our experimental setup well, since participants started from the center of the corridor and had an equal chance of finding a right-ended or a left-ended corridor. Intuitively, they did not need to turn their head more to the right than to the left. Regarding the link between this factor and performance, higher values of the factor correspond to a higher probability of understanding the environment. Conversely, when the values of the factor become negative, the guess rate is close to chance level: the wider the lateral head movements, the better the guess. Similar considerations hold for the third behavioural factor, mainly related to the mean head rotation angle, which is the only variable with a significant weight on it. This factor highlights the importance of head movements during echolocation, in line with previous results, regardless of the environment and the kind of task performed5,18. Taken together, these results are important because they emphasize the key role of the head. Active head exploration therefore seems necessary to understand structural properties through echolocation. A limitation of our study is that it includes only sighted persons; the link between active head motion and the performance of expert echolocators is not addressed here. Although we cannot claim that our results are directly applicable to blind people, who in general show reduced head motion compared with sighted persons28, the head could still play a role. Whether the amount of head motion or the density of vocal emissions during head motion are important factors for echolocators is still unknown. Importantly, other evidence suggests treating sensory-deprived children with exercises based on head motion29. Therefore, teaching blind persons to actively move their head while echolocating might help induce a behaviour associated with the collection of more acoustic information.

SPACE: WE DON'T STOP BY CHANCE

We found a significant link between the spontaneous stop point and the probability of correct guessing: people who stopped earlier predicted the corridor shape more reliably. This result may serve, together with head behaviour, as a guideline for orientation and mobility practitioners to evaluate improvements in the use of echolocation to navigate in the environment. The average distance from the end of the corridor was 0.65 m (SD = 0.29 m): interestingly, almost the same distance (0.61 m) was found by Kolarik _et al_.9 when the obstacle was located along the body midline. Our task was different from that of Kolarik _et al_.9: in their task, the person had to stop when detecting an obstacle (assumed to exist), whereas in our task one or more lateral obstacles (i.e. the presence or absence of one or two apertures at the end sides of the corridor) could be present or not, while the end of the corridor was always in a fixed position.
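The link between the stop point and the probability of a correct guess comes from the logistic regression described in the Results, whose full model combines the four factor scores, CORRIDOR'S SHAPE and their interactions. As a simplified, hypothetical sketch, the code below fits a single-predictor model, p(correct) = 1 / (1 + exp(-(b0 + b1 · score))), by Newton-Raphson maximum likelihood. The function names and toy data are ours, not the authors' model. With a SPACE-like score as predictor, a negative fitted b1 would correspond to the pattern described in the Results, where lower SPACE scores (earlier stops) go with more correct guesses.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def fit_logistic_1d(x, y, max_iter=50, tol=1e-10):
    """Fit p(y = 1 | x) = sigmoid(b0 + b1 * x) by Newton-Raphson.

    x: predictor values (e.g. a hypothetical per-trial factor score)
    y: responses coded 1 = correct, 0 = incorrect
    """
    b0 = b1 = 0.0
    for _ in range(max_iter):
        # Gradient (g) of the log-likelihood and the information matrix (h)
        g0 = g1 = h00 = h01 = h11 = 0.0
        for xi, yi in zip(x, y):
            p = sigmoid(b0 + b1 * xi)
            w = p * (1.0 - p)
            g0 += yi - p
            g1 += (yi - p) * xi
            h00 += w
            h01 += w * xi
            h11 += w * xi * xi
        det = h00 * h11 - h01 * h01
        if det <= 0.0:
            raise ValueError("singular information matrix (constant or separable predictor?)")
        # Newton step: solve [[h00, h01], [h01, h11]] @ step = [g0, g1]
        step0 = (h11 * g0 - h01 * g1) / det
        step1 = (h00 * g1 - h01 * g0) / det
        b0 += step0
        b1 += step1
        if max(abs(step0), abs(step1)) < tol:
            break
    return b0, b1

if __name__ == "__main__":
    # Toy data: binary predictor, 1/4 correct at x = 0 and 3/4 correct at x = 1
    x = [0, 0, 0, 0, 1, 1, 1, 1]
    y = [1, 0, 0, 0, 1, 1, 1, 0]
    b0, b1 = fit_logistic_1d(x, y)
    print(b0, b1, sigmoid(b0), sigmoid(b0 + b1))
```

For this toy data the fitted probabilities reproduce the observed proportions (0.25 and 0.75), which is a convenient check of the maximum-likelihood fit. With perfectly separable data the likelihood has no finite maximum, so a statistical package with proper diagnostics is preferable for real analyses.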

Despite these differences between the two tasks, we tentatively suggest that the distance at which the spatial properties of an object reveal themselves through echolocation may not depend on the sound environment. Further research is necessary to discover acoustic spatial invariants30. Finally, the configuration of the corridor did not have a main effect on performance, so the shape of the corridor did not significantly bias the guess rate. However, we found an interaction between corridor shape and all the factors influencing performance, suggesting that the structure of the environment influences how the body moves, but not the final outcome of the task. This is interesting, since it could hint that body motion reflects spatial structures _before_ these are explicitly externalized. Looking purely at performance, in both our training and experimental sessions the percentage of correct guesses was on average roughly double that expected by chance.
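The absence of a main effect of corridor configuration corresponds to the one-way ANOVA with factor SHAPE reported in the Results, F(2,14). Those degrees of freedom are consistent with a within-subject (repeated-measures) design with 8 participants and 3 shapes, since (3 − 1) × (8 − 1) = 14; this reading is our inference. The sketch below computes such a repeated-measures F statistic; the function name and example table are hypothetical, not the study's data, and a p-value would additionally require the F distribution (e.g. from `scipy.stats`).

```python
def repeated_measures_f(table):
    """One-way repeated-measures ANOVA.

    table[s][c]: score of participant s in condition c (e.g. corridor shape).
    Returns (F, df_condition, df_error).
    """
    n, k = len(table), len(table[0])
    if n < 2 or k < 2 or any(len(row) != k for row in table):
        raise ValueError("need a complete participants x conditions table")
    grand = sum(sum(row) for row in table) / (n * k)
    cond_means = [sum(row[c] for row in table) / n for c in range(k)]
    subj_means = [sum(row) / k for row in table]
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    # Between-participant variability is removed from the error term
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_total = sum((v - grand) ** 2 for row in table for v in row)
    ss_error = ss_total - ss_cond - ss_subj
    if ss_error <= 0:
        raise ValueError("no residual variance left for the error term")
    df_cond, df_error = k - 1, (k - 1) * (n - 1)
    f = (ss_cond / df_cond) / (ss_error / df_error)
    return f, df_cond, df_error

if __name__ == "__main__":
    # Hypothetical scores: 3 participants x 3 corridor shapes (not the study's data)
    print(repeated_measures_f([[1, 2, 4], [2, 4, 5], [3, 3, 6]]))
```

For the toy table the statistic is F ≈ 21 with (2, 4) degrees of freedom; with 8 participants and 3 shapes the function returns (2, 14), matching the degrees of freedom reported in the Results.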

Although the difference was not significant, the experimental session showed slightly lower performance, possibly due to the absence of the physical stop constraint. Therefore, free motion seems to add ecological validity to our setup without a cost in terms of understanding spatial properties. A practical implication of this result is that rehabilitation practitioners might use the stop distance from the walls in echolocation tasks as one proxy for successful training, or at least as a sign that guesses are not being given by chance.

GENERAL CONCLUSIONS

Two main contributions emerge from this study:

1. This is the first time that a study provides information about the kinematics of echolocation in sighted participants performing a direction discrimination task. The information includes variables such as average velocity, motion duration and head exploration, which we demonstrate to be important factors for assessing the efficacy of daily echolocation-based navigation. More importantly, this is the first study that correlates task performance with variables linked to body motion. Our analysis may be a useful baseline for future studies on the effects of echolocation training, or for comparisons between kinematic models of trained versus untrained blind echolocators.
2. The accuracy of responses in an echolocation task does not depend on how long or how fast naïve sighted echolocators move their body; rather, it depends on the distance from the object to be detected and on how often the head explores the space _while_ producing vocal emissions. Our results can explain why in Fiehler _et al_.16 sighted participants were not able to discriminate among path directions. It is entirely possible that participants who listen to pre-recorded echolocation clicks while stationary (with no possibility of moving freely when performing an echolocation task) cannot obtain accurate spatial information. This point was already discussed by Milne _et al_.5.

Overall, this study adds new information about behaviour during echolocation. It might be useful in developing rehabilitation solutions for blind individuals. Our results suggest that, in addition to focusing on the type and quality of the clicks produced during echolocation, attention should be paid to the movements and the amount of active exploration performed by the body. Specifically, while travel time does not seem to be important for assessing echolocation skills, rehabilitation practitioners may work on improving their trainees' head motion, which is important for obtaining information about acoustic spatial properties, and may observe their trainees' walking trajectories during echolocation, which may hint at whether a tendency to correctly guess spatial cues is emerging. Knowing which
movements are most suitable and how to use them can help to speed up the learning of a technique such as echolocation. REFERENCES * Kolarik, A. J., Cirstea, S., Pardhan, S. & Moore, B.

C. J. A summary of research investigating echolocation abilities of blind and sighted humans. _Hear. Res._ 310, 60–8 (2014).
* Supa, M., Cotzin, M. & Dallenbach, K. M. ‘Facial Vision’: The Perception of Obstacles by the Blind. _Am. J. Psychol._ 57, 133 (1944).
* Thaler, L. & Goodale, M. A. Echolocation in humans: an overview. _Wiley Interdiscip. Rev. Cogn. Sci._ 7, 382–393 (2016).
* Thaler, L., Milne, J. L., Arnott, S. R., Kish, D. & Goodale, M. A. Neural correlates of motion processing through echolocation, source hearing, and vision in blind echolocation experts and sighted echolocation novices. _J. Neurophysiol._ 111, 112–27 (2014).
* Milne, J. L., Goodale, M. A. & Thaler, L. The role of head movements in the discrimination of 2-D shape by blind echolocation experts. _Atten. Percept. Psychophys._ 76, 1828–37 (2014).
* Teng, S. The acuity of echolocation: Spatial resolution in the sighted compared to expert performance. _J. Vis. Impair. Blind._ 105, 20–32 (2011).
* Tonelli, A., Brayda, L. & Gori, M. Depth Echolocation Learnt by Novice Sighted People. _PLoS One_ 11, e0156654 (2016).
* Rosenblum, L. D., Gordon, M. S. & Jarquin, L. Echolocating Distance by Moving and Stationary Listeners. _Ecol. Psychol._ 12, 181–206 (2010).
* Kolarik, A. J., Scarfe, A. C., Moore, B. C. J. & Pardhan, S. An assessment of auditory-guided locomotion in an obstacle circumvention task. _Exp. Brain Res._ https://doi.org/10.1007/s00221-016-4567-y (2016).
* Kolarik, A. J., Scarfe, A. C., Moore, B. C. J. & Pardhan, S. Blindness enhances auditory obstacle circumvention: Assessing echolocation, sensory substitution, and visual-based navigation. _PLoS One_ 12, 1–25 (2017).
* Voss, P., Tabry, V. & Zatorre, R. J. Trade-Off in the Sound Localization Abilities of Early Blind Individuals between the Horizontal and Vertical Planes. _J. Neurosci._ 35, 6051–6056 (2015).
* Kolarik, A. J., Cirstea, S. & Pardhan, S. Evidence for enhanced discrimination of virtual auditory distance among blind listeners using level and direct-to-reverberant cues. _Exp. Brain Res._ 224, 623–33 (2013).
* Voss, P. & Zatorre, R. J. Organization and reorganization of sensory-deprived cortex. _Curr. Biol._ 22, R168–R173 (2012).
* Kupers, R. & Ptito, M. Compensatory plasticity and cross-modal reorganization following early visual deprivation. _Neurosci. Biobehav. Rev._ 41, 36–52 (2014).
* Collignon, O. _et al_. Impact of blindness onset on the functional organization and the connectivity of the occipital cortex. _Brain_ 136, 2769–2783 (2013).
* Fiehler, K., Schütz, I., Meller, T. & Thaler, L. Neural Correlates of Human Echolocation of Path Direction During Walking. _Multisens. Res._ 28, 195–226 (2015).
* Wallmeier, L. & Wiegrebe, L. Ranging in Human Sonar: Effects of Additional Early Reflections and Exploratory Head Movements. _PLoS One_ 9, e115363 (2014).
* Wallmeier, L. & Wiegrebe, L. Self-motion facilitates echo-acoustic orientation in humans. _R. Soc. Open Sci._ https://doi.org/10.1098/rsos.140185 (2014).
* Thaler, L., Arnott, S. R. & Goodale, M. A. Neural correlates of natural human echolocation in early and late blind echolocation experts. _PLoS One_ 6, e20162 (2011).
* Kaiser, H. F. The varimax criterion for analytic rotation in factor analysis. _Psychometrika_ https://doi.org/10.1007/BF02289233 (1958).
* Hosmer, D. W. & Lemeshow, S. _Applied Logistic Regression_, 2nd edn. https://doi.org/10.1002/0471722146 (2004).
* Thaler, L., Wilson, R. C. & Gee, B. K. Correlation between vividness of visual imagery and echolocation ability in sighted, echo-naïve people. _Exp. Brain Res._ 232, 1915–25 (2014).
* Schenkman, B. N. & Nilsson, M. E. Human Echolocation: Blind and Sighted Persons’ Ability to Detect Sounds Recorded in the Presence of a Reflecting Object. _Perception_ 39, 483–501 (2010).
* Flanagin, V. L. _et al_. Human Exploration of Enclosed Spaces through Echolocation. _J. Neurosci._ 37, 1614–1627 (2017).
* Kim, S. Y. & Cho, K. Usability and design guidelines of smart canes for users with visual impairments. _Int. J. Des._ (2013).
* Kalia _et al_. Assessment of Indoor Route-finding Technology for People Who Are Visually Impaired. _J. Vis. Impair. Blind._ https://doi.org/10.1016/j.drugalcdep.2008.02.002.A (2010).
* Soong, G. P., Lovie-Kitchin, J. E. & Brown, B. Does mobility performance of visually impaired adults improve immediately after orientation and mobility training? _Optom. Vis. Sci._ https://doi.org/10.1097/00006324-200109000-00011 (2001).
* Salem, O. H. & Preston, C. B. Head posture and deprivation of visual stimuli. _Am. Orthopt. J._ https://doi.org/10.3368/aoj.52.1.95 (2002).
* Daneshmandi, H., Majalan, A. S. & Babakhani, M. The comparison of head and neck alignment in children with visual and hearing impairments and its relation with anthropometrical dimensions. _Phys. Treat. Phys. Ther. J._ 4, 69–76 (2014).
* Fowler, C. A. Invariants, specifiers, cues: An investigation of locus equations as information for place of articulation. _Percept. Psychophys._ https://doi.org/10.3758/BF03211675 (1994).
* Stevens, J. P. _Applied multivariate statistics for the social
sciences_ (Routledge, 2012).

ACKNOWLEDGEMENTS

The authors would like to thank Marco Jacono for his help with kinematic measurements and Caterina Baccelliere for the help provided during data collection. We also thank the Istituto Chiossone.

AUTHOR INFORMATION

AUTHORS AND AFFILIATIONS

* Uvip, Unit for visually impaired people, Istituto Italiano di Tecnologia,
Genoa, Italy: Alessia Tonelli & Claudio Campus
* RBCS, Robotics, Brain and Cognitive science, Istituto Italiano di Tecnologia, Genoa, Italy: Luca Brayda

CONTRIBUTIONS

A.T. and L.B. conceived and designed the experiment. A.T. performed the experiment.
A.T. and C.C. analyzed the data. All authors wrote and edited the manuscript and gave final approval for publication.

CORRESPONDING AUTHOR

Correspondence to Alessia Tonelli.

ETHICS DECLARATIONS

COMPETING INTERESTS

The authors declare no competing interests.

ADDITIONAL INFORMATION

PUBLISHER’S NOTE: Springer Nature remains neutral with regard to jurisdictional claims in published
maps and institutional affiliations.

RIGHTS AND PERMISSIONS

OPEN ACCESS This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

ABOUT THIS ARTICLE

CITE THIS ARTICLE

Tonelli, A., Campus, C. & Brayda, L. How body motion influences echolocation while walking. _Sci Rep_ 8, 15704 (2018).
https://doi.org/10.1038/s41598-018-34074-7

* Received: 19 January 2018
* Accepted: 08 October 2018
* Published: 24 October 2018

KEYWORDS

* Sighted Participants
* Corridor Shape
* Echolocation Task
* Correct Guess
* Exploratory Head