- Select a language for the TTS:

- UK English Female

- UK English Male

- US English Female

- US English Male

- Australian Female

- Australian Male

- Language selected: (auto detect) - EN

Play all audios:

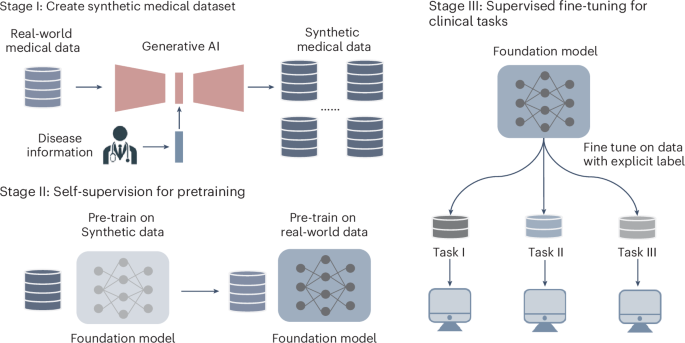

ABSTRACT

Foundation models are pretrained on massive datasets. However, collecting medical datasets is expensive and time-consuming, and raises privacy concerns. Here we show that synthetic data generated via conditioning with disease labels can be leveraged for building high-performing medical foundation models. We pretrained a retinal foundation model, first with approximately one million synthetic retinal images whose physiological structures and feature distributions are consistent with those of their real counterparts, and then with only 16.7% of the 904,170 real-world colour fundus photography images used by a recently reported retinal foundation model (RETFound). The data-efficient model performed as well as or better than RETFound across nine public datasets and four diagnostic tasks, and for diabetic-retinopathy grading it used only 40% of the expert-annotated training data used by RETFound. We also support the generalizability of the data-efficient strategy by building a classifier for the detection of tuberculosis on chest X-ray images. The text-conditioned generation of synthetic data may enhance the performance and generalization of medical foundation models.
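The data budget described in the abstract can be checked with a few lines of arithmetic. This is an illustrative sketch only: it takes the abstract's rounded figures at face value ("16.7%" and "approximately one million"), so the outputs are approximations, not the exact image counts used in the study.

```python
# Back-of-the-envelope arithmetic for the pretraining data budget in the abstract.
# Inputs are the abstract's rounded figures, so every output is approximate.

RETFOUND_REAL_IMAGES = 904_170   # real colour fundus photographs used by RETFound
REAL_FRACTION = 0.167            # "only 16.7%" of those images
SYNTHETIC_IMAGES = 1_000_000     # "approximately one million" synthetic images

real_used = RETFOUND_REAL_IMAGES * REAL_FRACTION
real_saved = RETFOUND_REAL_IMAGES - real_used
synthetic_per_real = SYNTHETIC_IMAGES / real_used

print(f"real images used for pretraining: ~{real_used:,.0f}")           # ~150,996
print(f"real images not needed:           ~{real_saved:,.0f}")          # ~753,174
print(f"synthetic images per real image:  ~{synthetic_per_real:.1f}")   # ~6.6
```

The roughly 151k result is in line with the 150k real-image pretraining set mentioned in Extended Data Fig. 4.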


DATA AVAILABILITY

The main data supporting the results in this study are available within the paper and its Supplementary Information. Data for pretraining can be accessed through the following weblinks: AIROGS (https://airogs.grand-challenge.org/data-and-challenge), Kaggle EyePACS (https://www.kaggle.com/c/diabetic-retinopathy-detection), DDR (https://github.com/nkicsl/DDR-dataset) and ODIR-2019 (https://odir2019.grand-challenge.org). Data for fine-tuning can be accessed through the following weblinks: IDRID (https://ieee-dataport.org/open-access/indian-diabetic-retinopathy-image-dataset-idrid), MESSIDOR-2 (https://www.adcis.net/en/third-party/messidor2), APTOS-2019 (https://www.kaggle.com/competitions/aptos2019-blindness-detection/data), PAPILA (https://figshare.com/articles/dataset/PAPILA/14798004/1), Glaucoma Fundus (https://dataverse.harvard.edu/dataset.xhtml?persistentId=doi:10.7910/DVN/1YRRAC), ORIGA (https://www.kaggle.com/datasets/arnavjain1/glaucoma-datasets), AREDS (https://www.ncbi.nlm.nih.gov/projects/gap/cgi-bin/study.cgi?study_id=phs000001.v3.p1), JSIEC (https://zenodo.org/record/3477553), Retina (https://www.kaggle.com/datasets/jr2ngb/cataractdataset), REFUGE (https://ieee-dataport.org/documents/refuge-retinal-fundus-glaucoma-challenge), RIM-ONE-DL (https://github.com/miag-ull/rim-one-dl?tab=readme-ov-file), CheXpert (https://stanfordmlgroup.github.io/competitions/chexpert/), Shenzhen Hospital CXR Set (https://data.lhncbc.nlm.nih.gov/public/Tuberculosis-Chest-X-ray-Datasets/Shenzhen-Hospital-CXR-Set/index.html) and TB Chest X-ray database (https://www.kaggle.com/datasets/tawsifurrahman/tuberculosis-tb-chest-xray-dataset).

CODE AVAILABILITY

The code of RETFound-DE is available at https://github.com/Jonlysun/DERETFound (ref. 64), and an online interactive platform is available at http://fdudml.cn:12001. We used Stable Diffusion, as implemented in diffusers (https://github.com/huggingface/diffusers), for the backbone and for image generation. The heat maps were generated with Grad-CAM (https://github.com/jacobgil/pytorch-grad-cam), and the _t_-SNE visualization was generated with tsne-pytorch (https://github.com/mxl1990/tsne-pytorch).

REFERENCES

1. Zhou, Y. et al. A foundation model for generalizable disease detection from retinal images. _Nature_ 622, 156–163 (2023).

2. Theodoris, C. V. et al. Transfer learning enables predictions in network biology. _Nature_ 618, 616–624 (2023).
3. Huang, Z. et al. A visual-language foundation model for pathology image analysis using medical Twitter. _Nat. Med._ 29, 2307–2316 (2023).
4. Zhang, X. et al. Knowledge-enhanced visual-language pre-training on chest radiology images. _Nat. Commun._ 14, 4542 (2023).
5. Moor, M. et al. Foundation models for generalist medical artificial intelligence. _Nature_ 616, 259–265 (2023).
6. Krishnan, R., Rajpurkar, P. & Topol, E. J. Self-supervised learning in medicine and healthcare. _Nat. Biomed. Eng._ 6, 1346–1352 (2022).
7. Mitchell, M., Jain, R. & Langer, R. Engineering and physical sciences in oncology: challenges and opportunities. _Nat. Rev. Cancer_ 17, 659–675 (2017).
8. Villoslada, P., Baeza-Yates, R. & Masdeu, J. C. Reclassifying neurodegenerative diseases. _Nat. Biomed. Eng._ 4, 759–760 (2020).
9. Rajpurkar, P. et al. AI in health and medicine. _Nat. Med._ 28, 31–38 (2022).
10. Ribaric, S., Ariyaeeinia, A. & Pavesic, N. De-identification for privacy protection in multimedia content: a survey. _Signal Process. Image Commun._ 47, 131–151 (2016).
11. Chang, Q. et al. Mining multi-center heterogeneous medical data with distributed synthetic learning. _Nat. Commun._ 14, 5510 (2023).
12. Bond-Taylor, S., Leach, A., Long, Y. & Willcocks, C. G. Deep generative modelling: a comparative review of VAEs, GANs, normalizing flows, energy-based and autoregressive models. _IEEE Trans. Pattern Anal. Mach. Intell._ 44, 7327–7347 (2021).
13. Kingma, D. P. & Welling, M. Auto-encoding variational Bayes. Preprint at https://arxiv.org/abs/1312.6114 (2013).
14. Goodfellow, I. et al. Generative adversarial networks. _Commun. ACM_ 63, 139–144 (2020).
15. Ho, J., Jain, A. & Abbeel, P. Denoising diffusion probabilistic models. _Adv. Neural Inf. Process. Syst._ 33, 6840–6851 (2020).
16. Rombach, R., Blattmann, A., Lorenz, D., Esser, P. & Ommer, B. High-resolution image synthesis with latent diffusion models. In _Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition_ 10684–10695 (IEEE, 2022).
17. Kazerouni, A. et al. Diffusion models in medical imaging: a comprehensive survey. _Med. Image Anal._ 88, 102846 (2023).
18. Repecka, D. et al. Expanding functional protein sequence spaces using generative adversarial networks. _Nat. Mach. Intell._ 3, 324–333 (2021).
19. Shin, J. E. et al. Protein design and variant prediction using autoregressive generative models. _Nat. Commun._ 12, 2403 (2021).
20. Watson, J. L. et al. De novo design of protein structure and function with RFdiffusion. _Nature_ 620, 1089–1100 (2023).
21. Schmitt, L. T. et al. Prediction of designer-recombinases for DNA editing with generative deep learning. _Nat. Commun._ 13, 7966 (2022).
22. Godinez, W. J. et al. Design of potent antimalarials with generative chemistry. _Nat. Mach. Intell._ 4, 180–186 (2022).
23. Huang, X. et al. The landscape of mRNA nanomedicine. _Nat. Med._ 28, 2273–2287 (2022).
24. Chen, Z. et al. A deep generative model for molecule optimization via one fragment modification. _Nat. Mach. Intell._ 3, 1040–1049 (2021).
25. Zhong, W., Yang, Z. & Chen, C. Y. C. Retrosynthesis prediction using an end-to-end graph generative architecture for molecular graph editing. _Nat. Commun._ 14, 3009 (2023).
26. Das, P. et al. Accelerated antimicrobial discovery via deep generative models and molecular dynamics simulations. _Nat. Biomed. Eng._ 5, 613–623 (2021).
27. Kanakasabapathy, M. K. et al. Adaptive adversarial neural networks for the analysis of lossy and domain-shifted datasets of medical images. _Nat. Biomed. Eng._ 5, 571–585 (2021).
28. Ozyoruk, K. B. et al. A deep-learning model for transforming the style of tissue images from cryosectioned to formalin-fixed and paraffin-embedded. _Nat. Biomed. Eng._ 6, 1407–1419 (2022).
29. DeGrave, A. J. et al. Auditing the inference processes of medical-image classifiers by leveraging generative AI and the expertise of physicians. _Nat. Biomed. Eng._ https://doi.org/10.1038/s41551-023-01160-9 (2023).
30. Cao, R. et al. Label-free intraoperative histology of bone tissue via deep-learning-assisted ultraviolet photoacoustic microscopy. _Nat. Biomed. Eng._ 7, 124–134 (2023).
31. Nichol, A. et al. GLIDE: towards photorealistic image generation and editing with text-guided diffusion models. Preprint at https://arxiv.org/abs/2112.10741 (2021).
32. Ramesh, A. et al. Zero-shot text-to-image generation. In _Proc. International Conference on Machine Learning_ 8821–8831 (PMLR, 2021).
33. Radford, A. et al. Learning transferable visual models from natural language supervision. In _Proc. International Conference on Machine Learning_ 8748–8763 (PMLR, 2021).
34. Kather, J. N. et al. Medical domain knowledge in domain-agnostic generative AI. _npj Digit. Med._ 5, 90 (2022).
35. Burlina, P. M. et al. Assessment of deep generative models for high-resolution synthetic retinal image generation of age-related macular degeneration. _JAMA Ophthalmol._ 137, 258–264 (2019).
36. Yoon, J. et al. EHR-Safe: generating high-fidelity and privacy-preserving synthetic electronic health records. _npj Digit. Med._ 6, 141 (2023).
37. Trabucco, B., Doherty, K., Gurinas, M. & Salakhutdinov, R. Effective data augmentation with diffusion models. In _Proc. International Conference on Learning Representations_ (ICLR, 2024).
38. Zhang, A. et al. Shifting machine learning for healthcare from development to deployment and from models to data. _Nat. Biomed. Eng._ 6, 1330–1345 (2022).
39. Chen, R. J. et al. Synthetic data in machine learning for medicine and healthcare. _Nat. Biomed. Eng._ 5, 493–497 (2021).
40. DuMont Schütte, A. et al. Overcoming barriers to data sharing with medical image generation: a comprehensive evaluation. _npj Digit. Med._ 4, 141 (2021).
41. _World Report on Vision_ (World Health Organization, 2019).
42. Cen, L. P. et al. Automatic detection of 39 fundus diseases and conditions in retinal photographs using deep neural networks. _Nat. Commun._ 12, 4828 (2021).
43. Alimanov, A. & Islam, M. B. Denoising diffusion probabilistic model for retinal image generation and segmentation. In _Proc. IEEE International Conference on Computational Photography_ 1–12 (IEEE, 2023).
44. Dosovitskiy, A. et al. An image is worth 16x16 words: transformers for image recognition at scale. In _Proc. International Conference on Learning Representations_ (ICLR, 2021).
45. He, K. et al. Masked autoencoders are scalable vision learners. In _Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition_ 16000–16009 (IEEE, 2022).
46. Karthik, M. & Sohier, D. _APTOS 2019 Blindness Detection_ (Kaggle, 2019).
47. Porwal, P. et al. IDRiD: diabetic retinopathy–segmentation and grading challenge. _Med. Image Anal._ 59, 101561 (2020).
48. Decencière, E. et al. Feedback on a publicly distributed image database: the Messidor database. _Image Anal. Stereol._ 33, 231–234 (2014).
49. Kovalyk, O. et al. PAPILA: dataset with fundus images and clinical data of both eyes of the same patient for glaucoma assessment. _Sci. Data_ 9, 291 (2022).
50. Zhang, Z. et al. ORIGA-light: an online retinal fundus image database for glaucoma analysis and research. In _Proc. Annual International Conference of the IEEE Engineering in Medicine and Biology_ 3065–3068 (IEEE, 2010).
51. Irvin, J. et al. CheXpert: a large chest radiograph dataset with uncertainty labels and expert comparison. In _Proc. AAAI Conference on Artificial Intelligence_ Vol. 33 590–597 (AAAI, 2019).
52. Jaeger, S. et al. Two public chest X-ray datasets for computer-aided screening of pulmonary diseases. _Quant. Imaging Med. Surg._ 4, 475–477 (2014).
53. Rahman, T. et al. Reliable tuberculosis detection using chest X-ray with deep learning, segmentation and visualization. _IEEE Access_ 8, 191586–191601 (2020).
54. Peng, W., Adeli, E., Zhao, Q. & Pohl, K. M. in _Medical Image Computing and Computer Assisted Intervention_ 14–24 (MICCAI, 2023).
55. Eschweiler, D. et al. Denoising diffusion probabilistic models for generation of realistic fully-annotated microscopy image datasets. _PLoS Comput. Biol._ 20, e1011890 (2024).
56. Ktena, I. et al. Generative models improve fairness of medical classifiers under distribution shifts. _Nat. Med._ 30, 1166–1173 (2024).
57. Bachmann, R., Mizrahi, D., Atanov, A. & Zamir, A. MultiMAE: multi-modal multi-task masked autoencoders. In _Proc. European Conference on Computer Vision_ 348–367 (Springer, 2022).
58. Shumailov, I. et al. AI models collapse when trained on recursively generated data. _Nature_ 631, 755–759 (2024).
59. Yang, Y. et al. The limits of fair medical imaging AI in real-world generalization. _Nat. Med._ 30, 2838–2848 (2024).
60. de Vente, C. et al. AIROGS: artificial intelligence for robust glaucoma screening challenge. _IEEE Trans. Med. Imaging_ 43, 542–557 (2024).
61. van den Oord, A., Vinyals, O. & Kavukcuoglu, K. Neural discrete representation learning. In _Proc. 31st Conference on Neural Information Processing Systems_ 6309–6318 (NIPS, 2017).
62. Song, Y. et al. Score-based generative modeling through stochastic differential equations. In _Proc. International Conference on Learning Representations_ (ICLR, 2021).
63. Selvaraju, R. R. et al. Grad-CAM: visual explanations from deep networks via gradient-based localization. In _Proc. IEEE International Conference on Computer Vision_ 618–626 (IEEE, 2017).
64. Yuqi. Controllable generative model enables high data efficiency for building medical foundation model. _GitHub_ https://github.com/Jonlysun/DERETFound (2024).

ACKNOWLEDGEMENTS

We acknowledge support for this work provided by the National Natural Science Foundation of China (grant numbers U2001209, 62372117 and 62472102) and the Natural Science Foundation of Shanghai (grant number 21ZR1406600). The computations in this research were performed using the CFFF platform of Fudan University. The AREDS dataset used for the analyses described in this paper was obtained from the Age-Related Eye Disease Study (AREDS) Database found at https://www.ncbi.nlm.nih.gov/projects/gap/cgi-bin/study.cgi?study_id=phs000001.v3.p1 through dbGaP accession number phs000001.v3.p1. Funding support for AREDS was provided by the National Eye Institute (N01-EY-0-2127). We thank the AREDS participants and the AREDS Research Group for their valuable contribution to this research.

AUTHOR INFORMATION

Author notes
* These authors contributed equally: Yuqi Sun, Weimin Tan.

AUTHORS AND AFFILIATIONS
* Shanghai Key Laboratory of Intelligent Information Processing, School of Computer Science, Fudan University, Shanghai, China: Yuqi Sun, Weimin Tan, Zhuoyao Gu, Ruian He, Siyuan Chen, Miao Pang & Bo Yan

CONTRIBUTIONS

B.Y. and W.T. supervised the research. B.Y. conceived the technique. Y.S. implemented the algorithm. Y.S. and W.T. designed the validation experiments. Y.S. trained the network and performed the validation experiments. Y.S. and W.T. analysed the validation results. Z.G. verified the code. R.H. provided technical support on the implementation of the web page. Z.G., M.P. and S.C. collected the public datasets. Y.S., W.T. and B.Y. wrote the paper.

CORRESPONDING AUTHOR

Correspondence to Bo Yan.

ETHICS DECLARATIONS

COMPETING INTERESTS

The authors declare no competing interests.

PEER REVIEW

PEER REVIEW INFORMATION

_Nature Biomedical Engineering_ thanks Pearse Keane and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

ADDITIONAL INFORMATION

PUBLISHER’S NOTE

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

EXTENDED DATA

EXTENDED DATA FIG. 1 PERFORMANCE (AUPR) IN DOWNSTREAM TASKS.

A, Internal evaluation. We fine-tuned the pretrained models on nine public datasets across four downstream tasks: diabetic retinopathy grading, glaucoma diagnosis, age-related macular degeneration (AMD) grading and multi-disease classification. Compared with RETFound, RETFound-DE achieves superior performance on six datasets (P < 0.05) and comparable performance on the other three datasets (P > 0.05). B, External evaluation. Models are fine-tuned on one diabetic retinopathy grading dataset and evaluated on the others. RETFound-DE outperforms RETFound when fine-tuned on APTOS-2019 and evaluated on IDRID, or when fine-tuned on IDRID and evaluated on MESSIDOR-2. The mean AUPR is shown on each bar, and the error bars show 95% confidence intervals. P values were calculated with a two-sided t-test and are listed in the figure.

EXTENDED DATA FIG. 2 CONFUSION MATRICES COMPARISON IN DOWNSTREAM TASKS.

The confusion matrices compare the classes predicted by the models with the actual labels, with each element of the matrix representing the prediction distribution for a specific class. RETFound-DE’s diagonal prediction scores are generally higher than those of SSL-ImageNet, and even higher than those of RETFound, indicating that it classifies each class more accurately in every task and has a lower misclassification rate.

EXTENDED DATA FIG. 3 LABEL AND TRAINING TIME EFFICIENCY (AUPR).

A, Label efficiency. Label efficiency refers to the amount of training data and labels a deep learning network needs to achieve the target performance. RETFound-DE and RETFound show better label efficiency than SSL-ImageNet, and RETFound-DE even outperforms RETFound on the Retina dataset. B, Training time efficiency. Training time efficiency refers to the fine-tuning time a foundation model needs to achieve the target performance when adapted to downstream datasets. RETFound-DE achieves the same performance as RETFound in less training time on several datasets, such as IDRID and Retina. The dashed grey lines highlight the significant difference between RETFound and RETFound-DE.

EXTENDED DATA FIG. 4 PERFORMANCE AND PRETRAINING TIME COMPARISONS OF SSL-IMAGENET-RETINAL AND RETFOUND-DE (AUPR).

A, The effect of synthetic data when different numbers of real images are used for pretraining. The performance of SSL-ImageNet-Retinal progressively improves on four downstream tasks as the number of real retinal images used for pretraining increases. By pretraining on generated images, RETFound-DE shows a significant performance improvement over SSL-ImageNet-Retinal. On the IDRID, MESSIDOR-2 and Retina datasets, RETFound-DE outperforms RETFound when pretrained on only 40k real retinal images. B, The performance of RETFound-DE and SSL-ImageNet-Retinal (150k) over a matched computational time of 5 to 6 8-A100 days. We use the 8-A100 day as a unit denoting pretraining for one day on 8 NVIDIA A100 GPUs. For both models, the pretraining dataset in this time period is the 150k real retinal image dataset. RETFound-DE consistently outperforms SSL-ImageNet-Retinal (150k) on all four downstream datasets within the same pretraining time.

EXTENDED DATA FIG. 5 INTERNAL AND EXTERNAL EVALUATION ON CHEST X-RAY IMAGES.

A, Internal evaluation. CXRFM and CXRFM-DE perform comparably on the ShenzhenCXR dataset and significantly outperform SSL-ImageNet. On the TBChest dataset, all three models achieve similar performance, with an AUROC exceeding 0.99. B, External evaluation. When fine-tuned on TBChest and evaluated on ShenzhenCXR, CXRFM and CXRFM-DE perform similarly, both substantially surpassing SSL-ImageNet. When fine-tuned on ShenzhenCXR and evaluated on TBChest, CXRFM outperforms CXRFM-DE. C, CXRFM-DE (denoted as ‘With synthetic data’) significantly outperforms SSL-ImageNet-Chest (20k) (denoted as ‘Without synthetic data’) under both conditions, demonstrating the improvement in generalization brought about by synthetic data.

EXTENDED DATA FIG. 6 FEATURE DISTRIBUTION OF REAL AND SYNTHETIC DATASETS IN TERMS OF AGE AND GENDER.

We use histograms and cumulative distribution functions to illustrate the feature distributions of real and synthetic data. (A) and (B) show the feature distributions of real and synthetic data for the 0 < Age < 60 and Age > 60 groups. (C) and (D) show the feature distributions of real and synthetic data for female and male subjects. The results show that the feature distributions of the real and synthetic datasets are consistent in terms of age and gender.

SUPPLEMENTARY INFORMATION
* Supplementary Information: supplementary notes, figures and captions for the supplementary tables.
* Reporting Summary
* Supplementary Tables: Supplementary Tables 1–9.

RIGHTS AND PERMISSIONS

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

ABOUT THIS ARTICLE

CITE THIS ARTICLE

Sun, Y., Tan, W., Gu, Z. _et al._ A data-efficient strategy for building high-performing medical foundation models. _Nat. Biomed. Eng._ 9, 539–551 (2025). https://doi.org/10.1038/s41551-025-01365-0

* Received: 10 January 2024
* Accepted: 04 February 2025
* Published: 05 March 2025
* Issue Date: April 2025
* DOI: https://doi.org/10.1038/s41551-025-01365-0
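The Extended Data captions report downstream performance as AUPR (Extended Data Figs 1, 3 and 4), confusion matrices (Extended Data Fig. 2) and AUROC (Extended Data Fig. 5). The following pure-Python sketch shows one common way to compute each quantity from model outputs. It is not taken from the DERETFound code, and two choices in it are assumptions: AUPR is computed as average precision (mean precision at the rank of each positive, exact when scores are untied), and multi-class scores are macro-averaged one-vs-rest, because the captions do not say how classes were aggregated. The confusion matrix is row-normalized so that each row is the prediction distribution for one actual class and its diagonal is per-class recall.

```python
from typing import Callable, List, Sequence


def auroc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Binary AUROC: chance that a random positive outscores a random negative (ties count 1/2)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUROC needs at least one positive and one negative")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def aupr(labels: Sequence[int], scores: Sequence[float]) -> float:
    """AUPR as average precision: mean precision at the rank of each positive (assumes untied scores)."""
    n_pos = sum(1 for y in labels if y == 1)
    if n_pos == 0:
        raise ValueError("AUPR needs at least one positive")
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    hits, precision_sum = 0, 0.0
    for rank, (_, y) in enumerate(ranked, start=1):
        if y == 1:
            hits += 1
            precision_sum += hits / rank
    return precision_sum / n_pos


def macro_one_vs_rest(metric: Callable[[Sequence[int], Sequence[float]], float],
                      y_true: Sequence[int],
                      probs: Sequence[Sequence[float]],
                      n_classes: int) -> float:
    """Average a binary metric over classes, treating each class in turn as the positive one."""
    per_class = [
        metric([1 if y == c else 0 for y in y_true], [row[c] for row in probs])
        for c in range(n_classes)
    ]
    return sum(per_class) / n_classes


def normalized_confusion(y_true: Sequence[int], y_pred: Sequence[int],
                         n_classes: int) -> List[List[float]]:
    """Row-normalized confusion matrix: row i is the prediction distribution for actual class i."""
    counts = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        counts[t][p] += 1
    normalized = []
    for row in counts:
        total = sum(row)
        normalized.append([c / total if total else 0.0 for c in row])
    return normalized


# Toy example with made-up scores (not data from the study).
labels = [1, 0, 1, 0]
scores = [0.9, 0.8, 0.7, 0.1]
print(auroc(labels, scores))                # 0.75
print(f"{aupr(labels, scores):.4f}")        # 0.8333
cm = normalized_confusion([0, 0, 1, 1, 2], [0, 1, 1, 1, 2], 3)
print([cm[i][i] for i in range(3)])         # [0.5, 1.0, 1.0]
```

In practice a library implementation (for example scikit-learn's metrics) would normally be used; the explicit loops here only make the definitions visible.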