
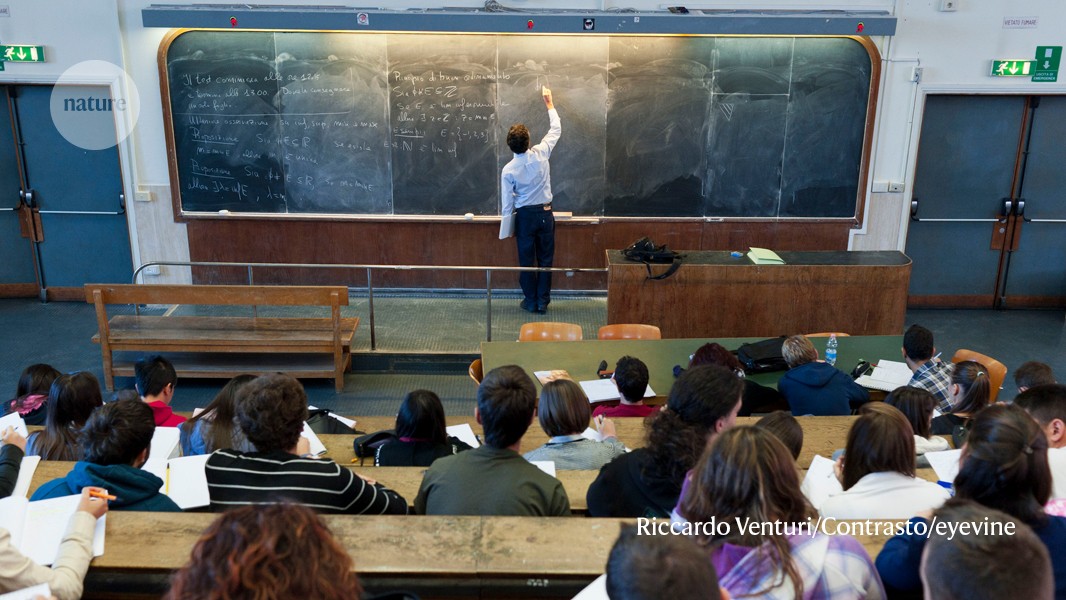

Last month, educational psychologist Ronald Beghetto asked a group of graduate students and teaching professionals to discuss their work in an unusual way. As well as talking to each other, they conversed with a collection of creativity-focused chatbots that Beghetto had designed and that will soon be hosted on a platform run by his institute, Arizona State University (ASU).

The bots are based on the same artificial-intelligence (AI) technology that powers the famous and conversationally fluent ChatGPT. Beghetto prompts the bots to take on various personas to encourage creativity, for example by deliberately challenging someone's assumptions. One student discussed various dissertation topics with the chatbots. Lecturers talked about how to design classes.

The feedback was overwhelmingly positive. One participant said that they had previously tried to use ChatGPT to support learning but had not found it useful, unlike Beghetto's chatbots. Another asked: "When are these things going to be available?" The bots helped participants to generate more possibilities than they would have considered otherwise.

Many educators fear that the rise of ChatGPT will make it easier for students to cheat on assignments. Yet Beghetto, who is based in Tempe, and others are exploring the potential of large language models (LLMs), such as ChatGPT, as tools to enhance education. Using LLMs to read and summarize large stretches of text could save students and teachers time and free them to focus on discussion and learning. ChatGPT's ability to lucidly discuss nearly any topic raises the prospect of using LLMs to create a personalized, conversational educational experience. Some educators see them as potential 'thought partners' that might cost less than a human tutor and that, unlike people, are always available.

"One-on-one tutoring is the single most effective intervention for teaching, but it's very expensive and not scalable," says Theodore Gray, co-founder of Wolfram Research, a technology company in Champaign, Illinois. "People have tried software, and it generally doesn't work very well. There's now a real possibility that one could make educational software that works." Gray told _Nature_ that Wolfram Research is currently working on an LLM-based tutor but gave few details.

Such AI partners could be used to lead students through a problem step by step, to stimulate critical thinking or, as in Beghetto's experiment, to enhance users' creativity and broaden the possibilities being considered. Jules White, director of the Initiative on the Future of Learning and Generative AI at Vanderbilt University in Nashville, Tennessee, calls ChatGPT "an exoskeleton for the mind".

THE RISKS ARE REAL

Since California firm OpenAI launched ChatGPT in November 2022, much of the attention regarding its use in education has been negative. LLMs work by learning how words and phrases relate to each other from training data containing billions of examples. In response to user prompts, they then produce sentences, including answers to assignment questions and even whole essays. Unlike the output of previous AI systems, ChatGPT's answers are often well written and seemingly well researched. This raises concerns that students will simply be able to get ChatGPT to do their homework for them, or at least that they might come to rely on a chatbot for quick answers without understanding the rationale.

ChatGPT could also lead students astray. Despite excelling in a host of business, legal and academic exams¹, the bot is notoriously brittle: it gets things wrong if a question is phrased slightly differently, and it even makes things up, an issue known as hallucination. Wei Wang, a computer scientist at the University of California, Los Angeles, found that GPT-3.5 (which powers the free version of ChatGPT) and its successor, GPT-4, got a lot wrong when tested on questions in physics, chemistry, computer science and mathematics taken from university-level textbooks and exams². Wang and her colleagues experimented with different ways to query the two GPT bots. They found that the best method used GPT-4, which answered around one-third of the textbook questions correctly that way (see 'AI's textbook errors'), although it scored 80% in one exam.

Privacy is another hurdle: students might be put off working regularly with LLMs once they realize that everything they type into them is being stored by OpenAI and might be used to train the models.

EMBRACING LLMS

But despite the challenges, some researchers, educators and companies see huge potential in ChatGPT and its underlying LLM technology. Like Beghetto and Wolfram Research, they are now experimenting with how best to use LLMs in education. Some use alternatives to ChatGPT, some find ways to minimize inaccuracies and hallucinations, and some improve the LLMs' subject-specific knowledge.

"Are there positive uses?" asks Collin Lynch, a computer scientist at North Carolina State University in Raleigh who specializes in educational systems. "Absolutely. Are there risks? There are huge risks and concerns. But I think there are ways to mitigate those."

Society needs to help students to understand LLMs' strengths and risks, rather than just forbidding them to use the technology, says Sobhi Tawil, director of the future of learning and innovation at UNESCO, the United Nations' agency for education, in Paris. In September, UNESCO published a report entitled _Guidance for Generative AI in Education and Research_. One of its key recommendations is that educational institutions validate tools such as ChatGPT before using them to support learning³.

Companies are marketing commercial assistants, such as MagicSchool and Eduaide, that are based on OpenAI's LLM technology and help schoolteachers to plan lesson activities and assess students' work. Academics have produced other tools, such as PyrEval⁴, created by computer scientist Rebecca Passonneau's team at Pennsylvania State University in State College, to read essays and extract the key ideas.

With help from educational psychologist Sadhana Puntambekar at the University of Wisconsin–Madison, PyrEval has scored physics essays⁵ written during science classes by around 2,000 middle-school students a year for the past three years. The essays are not given conventional grades, but PyrEval enables teachers to quickly check whether assignments include key themes and to provide feedback during the class itself, something that would otherwise be impossible, says Puntambekar. PyrEval's scores also help students to reflect on their work: if the AI doesn't detect a theme that the student thought they had included, it could indicate that the idea needs to be explained more clearly or that they made small conceptual or grammatical errors, she says. The team is now asking ChatGPT and other LLMs to do the same task and is comparing the results.

INTRODUCING THE AI TUTOR

Other organizations use AI to help students directly. That's the approach of what is perhaps the most widely used LLM-based education tool other than ChatGPT itself: the AI tutor and teaching assistant Khanmigo. The tool is the result of a partnership between OpenAI and the education non-profit organization Khan Academy in Mountain View, California. Using GPT-4, Khanmigo offers students tips as they work through an exercise, saving teachers time.

Khanmigo works differently from ChatGPT. It appears as a pop-up chatbot on a student's computer screen, and students can discuss the problem that they are working on with it. The tool automatically adds a prompt before it sends the student's query to GPT-4, instructing the bot not to give away answers and instead to ask lots of questions.

Kristen DiCerbo, the academy's chief learning officer, calls this process a "productive struggle". But she acknowledges that Khanmigo is still in a pilot phase and that there is a fine line between a question that aids learning and one that's so difficult that it makes students give up. "The trick is to figure out where that line is," she says.

Khanmigo was first introduced in March, and more than 28,000 US teachers and 11–18-year-old students are piloting the AI assistant this school year, according to Khan Academy. Users include private subscribers as well as more than 30 school districts. Individuals pay US$99 a year to cover the computing costs of LLMs, and school districts pay $60 a year per student for access. To protect student privacy, OpenAI has agreed not to use Khanmigo data for training.

But whether Khanmigo can truly revolutionize education is still unclear. LLMs are trained to predict the next most likely word in a sentence, not to check facts, so they sometimes get things wrong. To improve its accuracy, the prompt that Khanmigo sends to GPT-4 now includes the right answers for guidance, says DiCerbo. It still makes mistakes, however, and Khan Academy asks users to let the organization know when it does.

Lynch says Khanmigo seems to be doing well. But he cautions: "I haven't seen a clear validation yet." More generally, Lynch stresses that it's crucial that any chatbot used in education is carefully checked for its tone as well as its accuracy, and that it does not insult or belittle students or make them feel lost. "Emotion is key to learning. You can legitimately destroy somebody's interest in learning by helping them in a bad way," Lynch says. DiCerbo notes that Khanmigo responds differently to each student in each situation, which she hopes makes the bot more engaging than previous tutoring systems. Khan Academy expects to share its research on Khanmigo's efficacy in late 2024 or early 2025.

Other tutoring companies are offering LLMs as assistants for students or are experimenting with them. The education technology firm Chegg in Santa Clara, California, launched an assistant based on GPT-4 in April. And TAL Education Group, a Chinese tutoring company based in Beijing, has created an LLM called MathGPT that it claims is more accurate than GPT-4 at answering maths-specific questions. MathGPT also aims to help students by explaining how to solve problems.

AUGMENTING RETRIEVAL
