
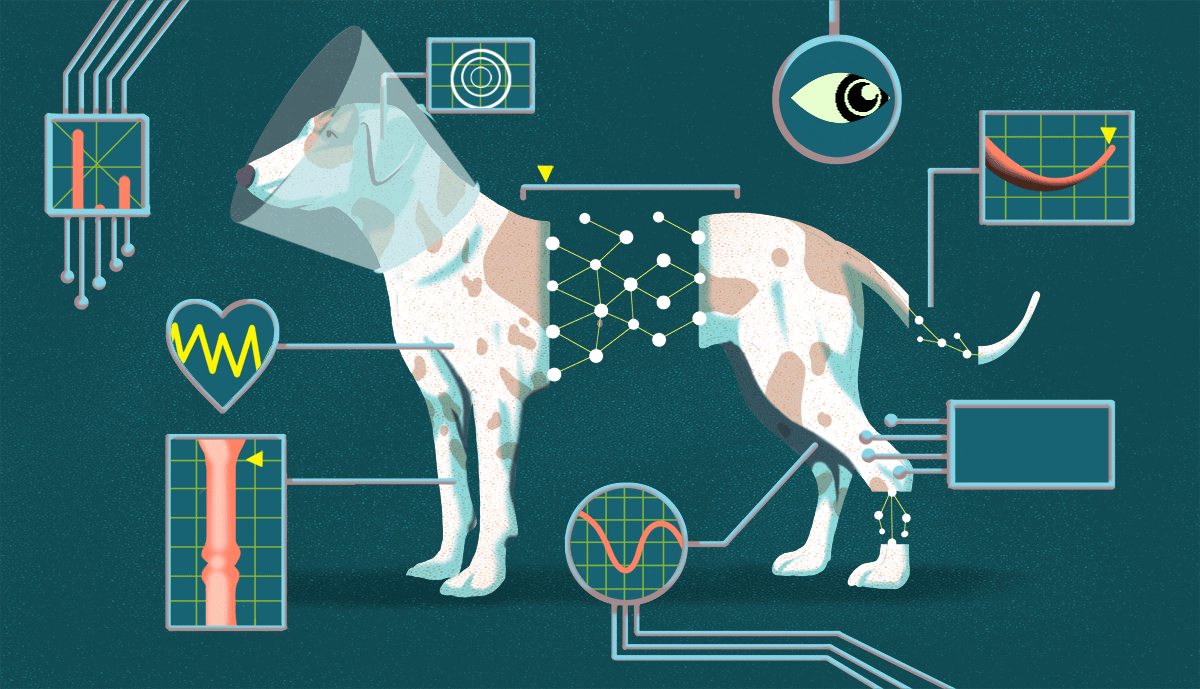

Goldstein says AI may mean a cheaper vet bill in some instances (he gives the example of AI instead of a human reviewing a urinalysis), but prices vary vet to vet, and each situation is different, so it’s not fair to say to your vet, “It’s AI, so it should be cheaper.”

“The cost compared to having a human expert review, for instance, the urine will be much less with AI. … I think you could say it’s not more expensive or it could be less expensive, but every veterinarian prices things differently,” he says.

IS THERE A DOWNSIDE?

AI’s biggest risk is low quality, Reagan and Goldstein agree. If you teach a human worker to do their job using false or incomplete information, they’re going to do their job poorly. The same is true with computer algorithms.

“You really have to understand what data was used to train [AI products]. Because if the data is messy or mislabeled or has bias in it, then it’s going to be very difficult to trust the output,” says Reagan, who recalls an AI tool that purported to be able to diagnose skin cancer. It was trained using pictures of skin tumors taken by dermatologists, who typically measure malignant tumors with a ruler.

“Because dermatologists are highly skilled, they can differentiate malignant tumors really well. So if they thought the tumor was benign, they didn’t bother with the ruler. When they took this dataset and used it to train an AI model, the AI model worked just as well as dermatologists. But what the AI model was picking up on was the ruler: ‘ruler’ equals ‘malignant.’ So if you’re at home and you download this app and take a picture of your skin tumor, it’s going to default to benign.”

Therein lies the rub: AI is really good at recognizing patterns, as long as humans teach it the right patterns. For that reason, veterinarians considering AI-based solutions should ask vendors for information about how their algorithms were trained, as well as independent research or peer-reviewed studies.

“You need to be comfortable with the quality of the algorithms, the way the algorithms were trained and how the algorithms were validated,” Goldstein says. Veterinarians should look for evidence that AI tools were created through a collaboration of computer scientists with veterinarians, Reagan says; otherwise, developers are just guessing and could miss important nuances apparent only to veterinary experts.

PET PARENTS SHOULD ASK QUESTIONS

Pet owners should ask whether and how their vet uses AI. If AI is used, they should ask their vet the same questions their vet should ask AI vendors. “If a veterinarian uses AI to perform a diagnostic test, a pet owner should be able to ask, ‘How do I know the result is good?’ And the veterinarian should be able to answer that question,” Goldstein says.

If your veterinarian uses AI for clinical purposes, your pet’s medical records should say so, and your vet should make that information available to you, Reagan says.

Although it’s good for pet owners to learn about AI, the nuts and bolts can be complicated. The most important thing pet owners can do is find a veterinarian they trust, Tancredi stresses. If you trust your veterinarian, he says, you should be able to trust the tools they use.