- Select a language for the TTS:

- UK English Female

- UK English Male

- US English Female

- US English Male

- Australian Female

- Australian Male

- Language selected: (auto detect) - EN

Play all audios:

ABSTRACT Chronic Obstructive Pulmonary Disease (COPD) is a prevalent chronic pulmonary condition that affects hundreds of millions of people all over the world. Many COPD patients got

readmitted to hospital within 30 days after discharge due to various reasons. Such readmission can usually be avoided if additional attention is paid to patients with high readmission risk

and appropriate actions are taken. This makes early prediction of the hospital readmission risk an important problem. The goal of this paper is to conduct a systematic study on developing

different types of machine learning models, including both deep and non-deep ones, for predicting the readmission risk of COPD patients. We evaluate those different approaches on a real

world database containing the medical claims of 111,992 patients from the Geisinger Health System from January 2004 to September 2015. The patient features we build the machine learning

models upon include both knowledge-driven ones, which are the features extracted according to clinical knowledge potentially related to COPD readmission, and data-driven features, which are

extracted from the patient data themselves. Our analysis showed that the prediction performance in terms of Area Under the receiver operating characteristic (ROC) Curve (AUC) can be improved

from around 0.60 using knowledge-driven features, to 0.653 by combining both knowledge-driven and data-driven features, based on the one-year claims history before discharge. Moreover, we

also demonstrate that the complex deep learning models in this case cannot really improve the prediction performance, with the best AUC around 0.65. SIMILAR CONTENT BEING VIEWED BY OTHERS

MODELLING 30-DAY HOSPITAL READMISSION AFTER DISCHARGE FOR COPD PATIENTS BASED ON ELECTRONIC HEALTH RECORDS Article Open access 10 April 2023 MACHINE LEARNING FOR PATIENT RISK STRATIFICATION:

STANDING ON, OR LOOKING OVER, THE SHOULDERS OF CLINICIANS? Article Open access 30 March 2021 COMPARISON OF DEEP LEARNING WITH TRADITIONAL MODELS TO PREDICT PREVENTABLE ACUTE CARE USE AND

SPENDING AMONG HEART FAILURE PATIENTS Article Open access 13 January 2021 INTRODUCTION Chronic Obstructive Pulmonary Disease (COPD) is one type of obstructive lung disease makes people

difficult to breathe. The Global Burden of Disease Study reports a prevalence of 251 million cases of COPD globally in 2016, and it is estimated that 3.17 million global deaths were caused

by the disease in 20151. In US it was reported that 21% of the COPD patients got readmitted 30 days after discharge and the cost for these readmissions is 18% higher than those for initial

hospital stays2. The Centers for Medicare and Medicaid Services (CMS) has set COPD as one of their important target diseases for designing policies to reduce readmissions because of this

high prevalence and cost. According to Purdy _et al_.3, COPD is an ambulatory care sensitive condition where hospital admission could be avoided by effective interventions in primary or

preventative care. The risk factors for COPD readmission remain largely unknown. Retrospective4 and prospective5 studies have been conducted to investigate COPD readmissions. In recent

years, because of the rapid development of computer software and hardware technologies and wide adoption of electronic medical data systems, more and more health related data such as

Electronic Health Records (EHR) and medical claims are becoming readily available. Many computational models have been developed based on these data for predicting the risk of hospital

readmission. The LACE index6 uses four variables (L ength of stay (L), A cuity of the admission (A), C omorbidity of the patient (C) and E mergency department use in the duration of 6 months

before admission (E)) to predict the risk of death or nonelective 30-day readmission after hospital discharge among both medical and surgical patients. Similarly, the HOSPITAL score7 uses 7

clinical predictors (which are available in patient EHRs) to identify patients at high risk of potentially avoidable hospital readmission within 30 days. Researchers have also explored pure

data-driven machine learning approaches for this problem. For example, Hosseinzadeh _et al_.8 investigated the predictability of hospital readmission using classical machine learning

methods (e.g., naïve Bayes and decision trees) using the claims data from the provincial hospital system in Quebec, Canada. Cauruana _et al_.9 applied generalized additive model to predict

the hospital readmission risk of a general cohort with around 400,000 patients, where each patient is represented as a vector of about 4,000 dimensions. Sushmita _et al_.10 studied the

prediction of all-cause hospital readmission with machine learning methods (support vector machine, decision trees, random forests and generalized boosting machine) using the admission data

of patients provided by a large hospital chain in the Northwestern United States. These studies have demonstrated the better potential of machine learning models for hospital readmission

prediction comparing to LACE and HOSPITAL score. Recently, deep learning11, as a specific type of machine learning models, has attracted attentions of researchers in various fields (e.g.,

computer vision, speech analysis and natural language processing) because of their superior performance. Researchers have also explored the potential of deep learning approaches in hospital

readmission prediction. For example, Wang _et al_.12 developed a cost-sensitive deep learning approach combining Convolutional Neural Network (CNN)13 and Multi-Layer Perceptron (MLP)14 for

readmission prediction. Xiao _et al_.15 adapted the TopicRNN approach16, which combines probabilistic topic modeling17 and Recurrent Neural Network (RNN)18 to better capture long-term

dependencies in sequences, to predict the readmission risk of heart failure patients. Rajkomar _et al_.19 also developed an approach that ensembles three deep learning models to predict the

risk of 30-day unplanned readmission. Despite the initial success, so far there is no comprehensive and systematic investigation on the potential of machine learning models for hospital

readmission risk prediction. The goal of this paper is to conduct such a study on COPD patients using their longitudinal claims records. The output of our model is the probability that each

patient will be readmitted within 30 days at the time of discharge. We comprehensively examined the performance of traditional machine learning models including logistic regression and

variants, random forest, Support Vector Machine (SVM) and Gradient Boosting Decision Tree, as well as deep learning models including MLP, CNN, RNN and variants, using both knowledge and data

driven patient features. METHODS AND MATERIALS This paper aims at conducting a systematic comparative study on the performance of different machine learning models for predicting the

hospital readmission risk of COPD patients. Here we characterize a machine learning model as either traditional (non-deep) or deep. A traditional model is typically composed of two major

steps, feature engineering20 and model building21. Feature engineering extracts “good” features from the data that are effective for the model building step. Different from traditional

methods, a deep learning model11 enjoys an end-to-end learning mechanism, where the feature engineering part is implicitly integrated into the learning pipeline. In the following we

introduce these two types of approaches formally. TRADITIONAL METHODS As we introduced above, there are two major steps in traditional methods: feature engineering and model building.

FEATURE ENGINEERING Our goal is to predict the risk of hospital readmission, which is defined as a readmission to hospital within 30 days of a prior hospital discharge. Therefore, the

prediction is made on the day of hospital discharge. Patient features can be constructed from the medical history prior to the discharge day. Here we categorize the patient features as

either knowledge- or data-driven. More specifically, we investigate the following knowledge - driven features. * HOSPITAL Score7. The original HOSPITAL score is aggregated from 7 features

from different subdomains, wherein 4 of them are available in our claims data, including the number of procedures performed during hospital stay (HOS_Proc), the number of hospital admissions

during the previous year (HOS_NOAD), the number of hospital stays with >=5 days (HOS_LOS), and the index admission type (HOS_Index). We use them as separate dimensions in the patient

representation. * LACE Index6. The LACE index is aggregated from 4 features, i.e. Length of stay (days) (L), Acute (emergent) admission (A), Charlson Comorbidity Index (C) and Number of ED

visits within six months (E). We use them as separate dimensions in the patient representation. * Handcrafted Features. In addition to HOSPITAL score and LACE index, we also picked 12

features that could be important to our task, including age, gender, length of stay (LOS), number of admissions in the previous year (NOA), total length of all stays in the previous year

(LOAS), number of all kinds of admissions (NOAA, including outpatient admissions), number of different types of index admissions (Index, Index_trans, Index_final, Readm, Readm_trans,

Readm_final). One limitation of our data is that some important patient features, such as the Global Initiative for Obstructive Lung Disease (GOLD) severity grade22, are not available,

therefore we cannot use them in the predictive modeling process. The other feature category is data-driven features, which includes the following four different types. * Diagnosis. The

patient diagnosis in our data is encoded with the International Classification of Diseases (ICD-9) codes. Considering the large number of distinct ICD-9 codes, we further investigated three

different grouping strategies: (1) First three digits of ICD-9; (2) Clinical Classifications Software (CCS) codes; (3) Hierarchical Condition Category (HCC) codes. * Procedures. The patient

procedure information is encoded with three different coding sources, i.e., CCS codes, Berenson-Eggers Type of Service (BETOS) codes, and revenue codes. * Pharmacy. The pharmacy/medication

information is encoded with National Drug Code (NDC), which we further mapped to the Generic Therapeutic Class (GTC) codes for the sake of dimensionality reduction. * Locations. We also

consider the location where the medical service is provided. For all four types of data-driven features, we construct the following representations through the analogy with natural language

processing23: * Bag-of-Words (BoW) representation, which counts the frequency of each feature in the feature construction time period. * boolean Bag-of-Words (bBoW), which just cares about

whether or not a specific feature appears in the feature construction time period. * Term Frequency-Inverse Document Frequency (TFIDF) normalization of the BoW representation23, which

suppresses the impact of highly prevalent features (which could be non-informative) by weighting the feature counts by the inverse of its popularity (counts) in all patients’ records. For

both knowledge- and data-driven features, we use either one year or full period before the hospital discharge date as the feature construction period (also called observation window). The

only exceptions are HOSPITAL score and LACE (which are defined over one year). Table 1 summarizes the dimensions of all features introduced above. In addition to the investigation of

different groups of features respectively in the predictive modeling process, we also combine multiple groups of features for training the models to see how they can boost the performance

MODEL BUILDING After the patient features are constructed, we will feed them into a machine learning model for readmission risk prediction. The following models are considered in this paper:

(1) Logistic regression and its variants (with \({\ell }_{1}\) or \({\ell }_{2}\) norm regularizations); (2) Random F orest; (3) Support Vector Machine (SVM)24, where we only consider the

linear case; (4) Gradient Boosting Decision Tree (GBDT)25; (5) Multi-Layer Perceptron (MLP)14. We introduce more details of these models below. * Logistic Regression (LR). Logistic

regression is a popular model in applied health service research. It can be used to explain the relationship between one dependent binary variable and one or more independent variables.

Mathematically, we model the probability logit (which is the log-odds) of the probability of an event, as a linear combination of predictive variables, i.e.,

\(logit(p(y=\mathrm{1|}{\bf{x}};{\bf{w}}))={{\bf{w}}}^{T}{\bf{x}}\), where \(logit(p)=\,\mathrm{log}(\frac{p}{1-p})\). The regression coefficients W are usually estimated through the maximum

likelihood estimation (MLE) procedure, which is equivalent to minimize the negative total data log-likelihood as \({\bf{w}}={\rm{\arg }}\,{{\rm{\min }}}_{{\bf{w}}}-{\sum

}_{i}^{N}\,\mathrm{log}\,p({y}_{i}|{{\bf{x}}}_{i};{\bf{w}})\). * Logistic Regression with \({\ell }_{1}\) penalty (LR_l1). Sometimes the number of independent variables is large, in which

case not every of them is useful. In order to promote model sparsity and pick out variables that really contribute to the prediction, we can add \({\ell }_{1}\) regularization to the

negative total data log-likelihood26, that is, \({\bf{w}}={\rm{a}}{\rm{r}}{\rm{g}}\,{{\rm{\min }}}_{{\bf{w}}}-{\sum }_{i}^{N}\,\mathrm{log}\,p({y}_{i}|{{\bf{x}}}_{i};{\bf{w}})+\beta

||{\bf{w}}{||}_{1}\). _β_ > 0 is the tradeoff parameter. * Logistic Regression with \({\ell }_{2}\) penalty (LR_l2). We can also add \({\ell }_{2}\) regularization to the negative total

data log-likelihood to improve numerical stability in the parameter estimation process, i.e., \({\bf{w}}={\rm{\arg }}\,{{\rm{\min }}}_{{\bf{w}}}-{\sum

}_{i}^{N}\,\mathrm{log}\,p({y}_{i}|{{\bf{x}}}_{i};{\bf{w}})+\beta ||{\bf{w}}{||}_{2}\). _β_ > 0 is the tradeoff parameter. * Random Forest (RF)27. Random forest is an ensemble learning

method, which constructs multiple decision trees (each on a randomly sampled feature set) at the training stage. Their outputs will be aggregated in the prediction stage (usually through

majority voting) as the final result. * Support Vector Machine (SVM)24. SVM is a discriminative classifier which constructs a hyperplane to separate the two classes with the maximum margin.

In particular, solves the following optimization problem \({\bf{w}}={\rm{\arg }}\,{{\rm{\min }}}_{{\bf{w}}}\,{\sum }_{1}^{N}\,{\rm{\max

}}\,\mathrm{(0,1}-{y}_{i}({{\bf{w}}}^{T}{{\bf{x}}}_{i}-b))+\lambda ||{\bf{w}}{||}_{2}\), where W is the separation hyperplane. * Gradient Boosting Decision Tree (GBDT)25. Gradient boosting

is an ensemble model comprising of a set of weak learners obtained in a stage-wise fashion through the minimization of some differentiable prediction loss using functional gradient descent.

For GDBT those weak learners are set to be decision trees. * Multi-layer Perceptron (MLP)28. Multi-layer perceptron is a class of feed-forward artificial neural network. It consists of

multiple hidden layers with nonlinear processing units, and is trained with the back-propagation technique. DEEP LEARNING METHODS One limitation of all traditional machine learning models we

introduced above is that they need to aggregate patient features in the observation window to form patient vectors. This ignores the temporality in patient records, which is usually

important in healthcare settings as it indicates the disease progression process. To explore such temporality, we construct a set of deep learning models, specifically Convolutional Neural

Networks (CNN)13, Recurrent Neural Networks (RNN)18 and their variants (e.g., Long-Short Term Memory (LSTM)29 and Gated Recurrent Unit (GRU)30). In addition, we further incorporate

contextual event embedding, time-sensitive modeling and attention mechanism into the model building process to enhance the model performance. The details are explained as follows. CONTEXTUAL

EVENT EMBEDDING If we concatenate the claim records for each patient according to their associated timestamps, we can obtain a medical event sequence for each patient. Contextual

embedding31 is a class of techniques that learn a vector based representation for each event in the sequence, such that each vector encodes the contextual information around its

corresponding event. Word2Vec32 is one representative contextual embedding technique that learns an embedded vector for each word in a document corpus (each document can be viewed as a word

sequence). Claims data can be analogous to the text data as they contain sequences of medical events, which play a similar role as words in texts. The difference is that each medical event

is associated with a concrete timestamp in claims data, which could be critical. For example, two medical events with one day and one year gap can have completely different meanings in

healthcare setting. Therefore we investigated the following variants of contextual embedding techniques. * 1. Using a time window instead of a context window to generate event contexts. * 2.

Weighting the event pairs according to the temporal gap between them. Higher weights will be given to temporally closer event pairs * 3. Med2Vec33, which is a contextual embedding technique

that is able to learn both event -level and visit-level representations for longitudinal patient records, where the temporal gap information is appended as an additional dimension in the

event/visit vectors. More details of these methods are provided in Supplementary Materials. In addition to these methods, we also implemented the one-hot embedding model as the baseline.

Specifically, let _V_ be the number of unique medical events, then the one-hot representation of an event is a _V_-dimensional binary vector with value 1 on the dimension corresponding to

the event and all other entries being 0. TIME FUSION IN DEEP MODELS In order to conveniently explore the event temporalities in patient claims, we investigated three types of patient

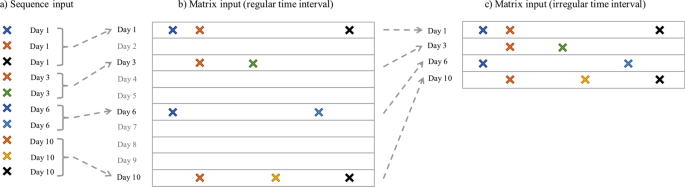

representations. * 1. _Sequence Representation_. We represent the records for each patient as two sequences, an event sequence and a timestamp sequence, and then treat the prediction problem

as a sequence classification problem. Specifically, let _V_ be the number of distinct medical events. For any specific patient, we have the event sequence \(\langle {c}_{1},{c}_{2},\cdots

,{c}_{L}\rangle \), and the the corresponding timestamp sequence \(\langle {t}_{1},{t}_{2},\cdots ,{t}_{L}\rangle \), where \({c}_{i}\in \mathrm{[1,}\,\mathrm{2,}\cdots ,V]\), and

\({t}_{1}\le {t}_{2}\le \cdots \le {t}_{L}\). We can apply the contextual event embedding techniques introduced above to embed each event _c__i_ as a vector W_i_, then the event sequence

becomes the vector sequence \({{\bf{w}}}_{1},{{\bf{w}}}_{2},\cdots ,{{\bf{w}}}_{L}\). Then we can incorporate time information using a time weighting layer. Given the timestamp sequence, we

can get a temporal weight \({d}_{i}\propto softmax(\lambda \cdot {\rm{\Delta }}{t}_{i})\), where Δ_t__i_ is the temporal gap between _t__i_ and the hospital discharge date when the

prediction is made on, _λ_ is a time scaling parameter to be learned in the training phase. * 2. _Matrix Representation with Regular Time Intervals (MR-RTI)_. In this case, we represent the

claims records of each patient as a longitudinal matrix similar to what Wang _et al_.34 did. The columns correspond to different medical events, so there are _V_ columns in total. The rows

correspond to regular time intervals. For example, each row could represent a day, a week or a month, depending on the time resolution. The (_i_, _j_)-th entry of this matrix is 1, if the

_j_-th event is observed at the _i_-th timestamp in the patient’s claims, and 0 otherwise. * 3. _Matrix Representation with Irregular Time Intervals (MR-ITI)_. The MR-RTI representation

could be very sparse – if the patient did not pay visit to the clinic on a specific day then he/she will have an all-zero row in the matrix. The MR-ITI representation deletes these all-zero

rows in MR-RTI, which greatly reduced the matrix sparsity. However, because the time intervals are no longer regular, we also need to record the exact timestamp for each row in the matrix.

This is similar to the sequence representation. We summarize the three different patient representations in Fig. 1. ATTENTION MECHANISM In addition to the time weighting layer to incorporate

timestamp information, we can also apply attention mechanism on the event embeddings to emphasize more on the important medical events. The attention weight for event _c__i_ is computed

using a softmax function \({a}_{i}\propto softmax({\beta }^{T}{{\bf{w}}}_{i})\), where _β_ is a reference vector to be learned from the model training process, and W_i_ is the embedded

vector of _c__i_. This attention weight _a__i_ tells us how much attention we should pay on event _c__i_. We can multiply it with the time weight to get a composite weight for each event in

the modeling process. The overall architecture of the deep learning models we investigated is provided in Fig. 3 in the supplemental material. RESULTS The detailed experimental results are

presented in this section. First we introduce the process of data preprocessing. DATA PREPROCESSING Our raw data contain 111,992 patients in Geisinger Health System who had at least one COPD

related diagnosis (ICD-9 diagnosis codes: 490.**, 491.**, 492.**, 493.2*, 494.**, 496.**) between January 2004 and September 2015. The information contained in patient claims include

patient demographics, medication, service location (utilization), diagnosis and procedure. Table 1 in the supplemental material summarizes the details of each type of information. We built a

three-step pipeline for data preprocessing: data filtering, data labeling and data splitting, which are detailed below. DATA FILTERING We filter the raw patient claims with the following

criteria: (1) Keep Main Hospital (MH) claims with status ‘Approved’; (2) Keep patients who have ever been diagnosed with at least one of 491.*, 492.*, and 496.* in MH DX claims; (3) Keep

patients who are at least 40 years old; (4) Keep patients with decided gender; (5) Keep patients with at least one Inpatient MH claim in the entire history; (6) Keep patients with

observation history of at least 60 days; (7) Keep patients with at least one pharmacy claim in the entire history. The detailed patient information before and after each filtering criterion

can be found in the Supplementary Material. DATA LABELING In order to build the predictive model, we further label each patient hospital admission as either index admission or readmission.

Specifically, a hospital readmission is when a patient who had been discharged from a hospital is admitted again to the same or a different hospital within 30 days. The original hospital

admission is referred to as index admission, and the subsequent admission is referred to as readmission. We further have the following inclusion criteria for index admissions in our study. *

1. The patient has enrollment information for at least 30 days after the discharge. This is necessary to gaurantee that readmissions within 30 days can be tracked. * 2. The patient was

enrolled for 12 months prior to the index admission. This is necessary to gather adequate clinical information for accurate risk adjustment. One issue we need to deal with is hospital

transfer. A hospital transfer is the case in which a patient is discharged from a hospital and admitted to another hospital at the same day. Therefore, we have 6 classes of hospital

admissions in total: index admission, index transfer (the patient is transferred at the same day of the index admission), index final (this is the last stop of the transfer), readmission,

readmission transfer, and readmission final. The numbers of all 6 kinds of admissions are provided in the supplemental material. Finally, we get 67,771 index admissions (i.e, index and

index_final), among which 10,265 (15.15%) samples are followed by a 30-day readmission. There are 27,138 patients involved in these hospital stays. We summarize the statistical

characteristics of the overall samples in Table 2. DATA SPLITTING We apply five-fold cross validation on all 27,138 patients to evaluate the performance of the investigated approaches. Note

that we cannot apply five-fold cross validation on discharges, because if one patient has multiple discharges, it is possible that some of these discharges are in training set while some are

in validation set. This may produce overly optimistic performance due to label leaking. TRADITIONAL METHODS We implemented seven different traditional machine learning models with different

of feature sets as introduced in the Methods Section. The results are summarized below. KNOWLEDGE-DRIVEN FEATURES The prediction performance in terms of Area Under the r eceiver o perating

c haracteristic (ROC) Curve (AUC) with knowledge-driven features are shown in Fig. 2(a). These features are extracted from the one-year history prior to the discharge of the index admission.

We can observe that: * 1. The two baseline methods, HOSPITAL score and LACE index, have similar performance with AUC around 0.60, and HOSPITAL score is slightly better. * 2. Our handcrafted

features can produce better performance than the two baseline methods. * 3. The combined knowledge-driven features lead to the best performance, with the mean AUC of 0.643 using the GBDT

classifier. To better understand knowledge-driven features, we further investigate the trained logistic regression model. We record the coefficients of all predictors in Table 3. We can find

that older age, male gender, longer length of stay, and more admissions in previous year will increase the risk of readmission. It is interesting to notice that a larger number of

index_trans in the previous year will decrease the risk. The reason could be that more hospital transfers lead to better patient care. The LACE index features and HOSPITAL score features

have positive relationship with readmission risk, except HOS_Proc, HOS_index. DATA-DRIVEN FEATURES For data-driven features, we combine the grouped diagnosis, grouped procedure, grouped

pharmacy and location codes together to obtain the combined data-driven features, whose performances are summarized in Fig. 2(b). From the figure we can observe that the best mean AUC value

is around 0.646, which can be obtained from the BoW representation using GBDT classifier. We further explore how different types of data -driven features influence the prediction

performance. These features are extracted from the one-year history prior to the discharge date of the index admission. The results are shown in Fig. 3, from which we can observe that: * 1.

The grouped codes (e.g., diagnosis codes grouped by CCS) can produce better performances than the original raw codes. This is potentially due to the high dimensionality of the raw codes,

which results in highly sparse feature representations. Grouping the codes can greatly reduce the dimensionality and thus increase the density of the feature vector. * 2. Comparing with

other features, diagnosis and procedure are more useful to the readmission prediction task, while the pharmacy feature is not very informative. * 3. The GBDT classifier generally achieves

the best performance among the seven traditional classifiers for most of the features. THE EFFECT OF OBSERVATION WINDOW LENGTHS We also explored how the observation window length will affect

the readmission prediction performance. We compared the performance of one-year observation window against the full-history. All knowledge- and data-driven features are concatenated. The

results are summarized in Table 4, from which we can observe that: * 1. For the one-year observation window, we can obtain the best AUC of 0.653 using GBDT, which is better than knowledge-or

data-driven features alone. * 2. Increasing the observation window from one year to full history barely improves the performance of GBDT, while most of other models get obvious

improvements. DEEP LEARNING METHODS For deep learning experiments, we focus on the impact of different time fusion and embedding strategies. TIME FUSION STRATEGIES We compare the performance

of different time fusion methods in Fig. 4a, from which we can observe that: * 1. The basic sequence classification without considering time information generates the worst performance.

This means that considering the exact event timestamps can indeed improve the prediction performance. * 2. Matrix representation with regular time intervals performs better than sequence

representation. * 3. Matrix representation with irregular time interval combined with event attentions does not necessarily improve the prediction performance. * 4. If we use a coarse time

granularity, for example by week or month instead of by day in matrix representations, the prediction AUC can be improved. The best performance of AUC 0.650 is achieved by GRU model based on

matrix representation by month. EMBEDDING STRATEGIES We also explored the impact of different embedding strategies. We used the matrix representation with regular time intervals aggregated

by month. The performance of using different embedding strategies is summarized in Fig. 4(b), from which we do not observe significant differences across the performances of different

embedding strategies. DISCUSSIONS From our investigations above on the task of readmission risk prediction for COPD patients based on patient claims data, we have the following observations.

* 1. _Knowledge is powerful_. Similar to what has been observed in Rajkomar _et al_.19, simple models based on clinical knowledge, such as LACE and Hospital Score, work pretty well in

reality. We also expanded the knowledge-driven features used in these two models to a broader set (see the handcrafted features in Table 1), which can further improve the prediction

performance in terms of AUC (from 0.61 to 0.64). Comparing with data-driven features, those knowledge-driven features are highly interpretable and generalizable. * 2. _Data-driven features

are helpful_. With the data-driven features, we can improve the prediction performance (from 0.64 to 0.65). Combining the knowledge- and data-driven features leads to the best prediction

performance (around 0.653). * 3. _GDBT is powerful_. Comparing with other traditional machine learning models, GBDT can achieve better performance almost across all different experimental

settings, and it obtained the best performance with the combination of both knowledge- and data-driven features. * 4. _Longer history barely helps_. We do not observe much differences on the

prediction performance on patient records with one-year observation window or full-history. This observation also explains implicitly why only one year history was used in both LACE and

HOSPITAL Score models. * 5. _Deep learning barely helps_. We have systematically investigated the performance of various deep learning models, including the variants of CNN and RNN with

different representation, embedding and time-sensitive strategies. However, the best performance achieved among them is on par with the best performance of GDBT (around 0.65). The same

phenomenon is also observed in Rajkomar _et al_.19. With these observations, we can conclude that predicting the risk of hospital readmission is difficult based on only claims data. Machine

learning models can benefit when combining patient data with clinical knowledge. This is potentially be explained from the following aspects. * 1. Medicine has been a research discipline

with long history. The medical knowledge people accumulated from clinical practice are invaluable and powerful. * 2. Unlike other application domains such as computer vision and natural

language processing, where deep learning models have been shown to be very powerful, medical problems are much more complicated and with less available training samples. This means that it

is difficult to have a ‘sufficiently large’ patient dataset to train a very good machine learning model. In this case, incorporating domain knowledge into the model building process is of

vital importance, and complex models do not necessarily lead to better performance as they need even more training samples. * 3. The information contained in patient claims records may not

be sufficient for building good hospital readmission risk prediction models. Some important and relevant clinical features, such as GOLD severity grade, are not available. More comprehensive

and finer granular patient data, such as electronic health records, could be potentially more helpful. * 4. Our claims data lacks mortality information of patients. In fact, hospital

readmission risk and death risk are competing clinical risks, since patients that die after discharge cannot be readmitted, which makes the risk of readmission and death after discharge are

often negatively linked. However, there could be some common causes for both risks (e.g., condition exacerbation), which could confuse the predictive models. CONCLUSION We conducted a

comprehensive study on predictive modeling of the 30 day readmission risk of COPD patients based on their claims records with various machine learning models. We constructed both knowledge-

and data-driven features from the patients’ claim records to train the predictive models. Both traditional and modern machine learning models are investigated. The results showed that the

combination of both knowledge and data driven features can lead to the best prediction performance, and complicated models such as deep learning can barely improvement the performance. Our

studies verify the importance of medical knowledge in the predictive modeling process, as well as the demands for better patient data. REFERENCES * Chronic obstructive pulmonary disease

(copd). http://www.who.int/news-room/fact-sheets/detail/chronic-obstructive-pulmonary-disease-(copd) (2016). * Elixhauser, A. _et al_. Readmissions for chronic obstructive pulmonary disease.

_Rockville, MD: Agency for Heal. Care Res. Qual_. (2011). * Purdy, S., Griffin, T., Salisbury, C. & Sharp, D. Prioritizing ambulatory care sensitive hospital admissions in england for

research and intervention: a delphi exercise. _Prim. Heal. Care Res. & Dev._ 11, 41–50 (2010). Article Google Scholar * Harries, T. H. _et al_. Hospital readmissions for copd: a

retrospective longitudinal study. _NPJ primary care respiratory medicine_ 27, 31 (2017). Article Google Scholar * Garcia-Aymerich, J. _et al_. Risk factors of readmission to hospital for a

copd exacerbation: a prospective study. _Thorax_ 58, 100–105 (2003). Article CAS Google Scholar * vanWalraven, C. _et al_. Derivation and validation of an index to predict early death or

unplanned readmission after discharge from hospital to the community. _Can. Med. Assoc. J._ 182, 551–557 (2010). Article Google Scholar * Donzé, J., Aujesky, D., Williams, D. &

Schnipper, J. L. Potentially avoidable 30-day hospital readmissions in medical patients: derivation and validation of a prediction model. _JAMA internal medicine_ 173, 632–638 (2013).

Article Google Scholar * Hosseinzadeh, A., Izadi, M. T., Verma, A., Precup, D. & Buckeridge, D. L. Assessing the predictability of hospital readmission using machine learning. In _The

Twenty-Fifth Innovative Applications of Artificial Intelligence Conference_ (2013). * Caruana, R. _et al_. Intelligible models for healthcare: Predicting pneumonia risk and hospital 30-day

readmission. In _Proceedings of the 21th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining_, 1721–1730 (ACM, 2015). * Sushmita, S. _et al_. Predicting 30-day risk

and cost of “all-cause” hospital readmissions. In _AAAI Workshop: Expanding the Boundaries of Health Informatics Using AI_ (2016). * LeCun, Y., Bengio, Y. & Hinton, G. Deep learning.

_nature_ 521, 436 (2015). Article CAS ADS Google Scholar * Wang, H. _et al_. Predicting hospital readmission via cost-sensitive deep learning. _IEEE/ACM Transactions on Comput. Biol.

Bioinforma_. (2018). * Krizhevsky, A., Sutskever, I. & Hinton, G. E. Imagenet classification with deep convolutional neural networks. In _Advances in neural information processing

systems_, 1097–1105 (2012). * Rosenblatt, F. Principles of neurodynamics. perceptrons and the theory of brain mechanisms. _Tech. Rep., CORNELL AERONAUTICAL LAB INC BUFFALO NY_ (1961). *

Xiao, C., Ma, T., Dieng, A. B., Blei, D. M. & Wang, F. Readmission prediction via deep contextual embedding of clinical concepts. _PloS one_ 13, e0195024 (2018). Article Google Scholar

* Dieng, A. B., Wang, C., Gao, J. & Paisley, J. TopicRNN: A recurrent neural network with long-range semantic dependency. _arXiv preprint arXiv:1611.01702_ (2016). * Blei, D. M.

Probabilistic topic models. _Commun. ACM_ 55, 77–84 (2012). Article Google Scholar * Mikolov, T., Karafiát, M., Burget, L., Černockỳ, J. & Khudanpur, S. Recurrent neural network based

language model. In _Eleventh Annual Conference of the International Speech Communication Association_ (2010). * Rajkomar, A. _et al_. Scalable and accurate deep learning with electronic

health records. NPJ Digit. _Medicine_ 1, 18 (2018). Google Scholar * Liu, H. & Motoda, H. _Feature extraction, construction and selection: A data mining perspective_, vol. 453 (Springer

Science & Business Media, 1998). * Michalski, R. S., Carbonell, J. G. & Mitchell, T. M. _Machine learning: An artificial intelligence approach_. (Springer Science & Business

Media, 2013). * Vestbo, J. _et al_. Global strategy for the diagnosis, management, and prevention of chronic obstructive pulmonary disease: Gold executive summary. _Am. journal respiratory

critical care medicine_ 187, 347–365 (2013). Article CAS Google Scholar * Manning, C. D., Manning, C. D. & Schütze, H. _Foundations of statistical natural language processing_. (MIT

press, 1999). * Cortes, C. & Vapnik, V. Support-vector networks. _Mach. learning_ 20, 273–297 (1995). MATH Google Scholar * Friedman, J. H. Greedy function approximation: a gradient

boosting machine. _Annals statistics_ 1189–1232 (2001). * Lee, S.-I., Lee, H., Abbeel, P. & Ng, A. Y. Efficient l˜ 1 regularized logistic regression. _In AAAI_ 6, 401–408 (2006). Google

Scholar * Breiman, L. Random forests. _Mach. learning_ 45, 5–32 (2001). Article Google Scholar * Rumelhart, D. E., Hinton, G. E. & Williams, R. J. Learning representations by

back-propagating errors. _nature_ 323, 533 (1986). Article ADS Google Scholar * Hochreiter, S. & Schmidhuber, J. Long short-term memory. _Neural computation_ 9, 1735–1780 (1997).

Article CAS Google Scholar * Cho, K. _et al_. Learning phrase representations using rnn encoder-decoder for statistical machine translation. _arXiv preprint arXiv:1406.1078_ (2014). *

Farhan, W. _et al_. A predictive model for medical events based on contextual embedding of temporal sequences. _JMIR medical informatics_ 4 (2016). * Mikolov, T., Chen, K., Corrado, G. &

Dean, J. Efficient estimation of word representations in vector space. _arXiv preprint arXiv:1301.3781_ (2013). * Choi, E. _et al_. Multi-layer representation learning for medical concepts.

In _Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining_, 1495–1504 (ACM, 2016). * Wang, F. _et al_. A framework for mining signatures from

event sequences and its applications in healthcare data. _IEEE transactions on pattern analysis machine intelligence_ 35, 272–285 (2013). Article Google Scholar Download references

ACKNOWLEDGEMENTS The work of F. W. is partially supported by NSF IIS-1716432 and NSF IIS-1750326. The authors would like to acknowledge Geisinger Health System for providing the data, and

Dr. Yang Jiang for the insightful feedbacks about the research. X. M. is also grateful for the valuable comments from Prof. Ting Chen, and the financial support from China Scholarship

Council (CSC). AUTHOR INFORMATION AUTHORS AND AFFILIATIONS * Department of Healthcare Policy and Research, Weill Cornell Medicine, New York, NY, USA Xu Min & Fei Wang * Department of

Computer Science and Technology, Institute for Artificial Intelligence, Tsinghua-Fuzhou Institute for Data Technology, and Bioinformatics Division, BNRist, Tsinghua University, Beijing,

China Xu Min * American Air Liquide, Newark, DE, USA Bin Yu Authors * Xu Min View author publications You can also search for this author inPubMed Google Scholar * Bin Yu View author

publications You can also search for this author inPubMed Google Scholar * Fei Wang View author publications You can also search for this author inPubMed Google Scholar CONTRIBUTIONS X.M.

and F.W. designed the approach. B.Y. prepared the data. X.M. conducted all the experiments and summarized the results. X.M. and F.W. wrote the paper. All authors polished the manuscript.

CORRESPONDING AUTHOR Correspondence to Fei Wang. ETHICS DECLARATIONS COMPETING INTERESTS The authors declare no competing interests. ADDITIONAL INFORMATION PUBLISHER’S NOTE: Springer Nature

remains neutral with regard to jurisdictional claims in published maps and institutional affiliations. SUPPLEMENTARY INFORMATION SUPPLEMENTARY MATERIAL RIGHTS AND PERMISSIONS OPEN ACCESS

This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as

long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third

party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the

article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright

holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/. Reprints and permissions ABOUT THIS ARTICLE CITE THIS ARTICLE Min, X., Yu, B. & Wang, F.

Predictive Modeling of the Hospital Readmission Risk from Patients’ Claims Data Using Machine Learning: A Case Study on COPD. _Sci Rep_ 9, 2362 (2019).

https://doi.org/10.1038/s41598-019-39071-y Download citation * Received: 25 July 2018 * Accepted: 15 January 2019 * Published: 20 February 2019 * DOI:

https://doi.org/10.1038/s41598-019-39071-y SHARE THIS ARTICLE Anyone you share the following link with will be able to read this content: Get shareable link Sorry, a shareable link is not

currently available for this article. Copy to clipboard Provided by the Springer Nature SharedIt content-sharing initiative